Infinite-dimensional vector function

An infinite-dimensional vector function is a function whose values lie in an infinite-dimensional vector space, such as a Hilbert space or a Banach space.

Such functions are applied in most sciences, including physics.

Example

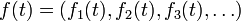

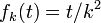

Set  for every positive integer k and every real number t. Then values of the function

for every positive integer k and every real number t. Then values of the function

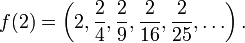

lie in the infinite-dimensional vector space X (or  ) of real-valued sequences. For example,

) of real-valued sequences. For example,

As a number of different topologies can be defined on the space X, we cannot talk about the derivative of f without first defining the topology of X or the concept of a limit in X.
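A finite truncation makes the example concrete. The sketch below assumes the illustrative choice $f_k(t) = t/k^2$; the helper names `f_truncated` and `l2_norm` are hypothetical. It lists the first few components of f(2) and evaluates one possible norm on X, the $\ell^2$ norm, on a truncated sequence.

```python
import math

def f_truncated(t, n):
    """First n components of f(t), assuming f_k(t) = t / k**2 (illustrative choice)."""
    return [t / k**2 for k in range(1, n + 1)]

def l2_norm(seq):
    """Euclidean (l^2) norm of a finite sequence; one of many possible norms on X."""
    return math.sqrt(sum(x * x for x in seq))

first_terms = f_truncated(2.0, 5)
print(first_terms)

# For this choice, ||f(t)||^2 = t^2 * sum(1/k^4) = t^2 * pi^4 / 90,
# so the truncated norm should approach |t| * pi^2 / sqrt(90).
approx = l2_norm(f_truncated(2.0, 10_000))
exact = 2.0 * math.pi**2 / math.sqrt(90)
print(approx, exact)
```

A different norm (or a topology that does not come from a norm) would give a different notion of convergence for the same sequence of values, which is why the topology has to be fixed before speaking of limits.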

Moreover, for any infinite set A, there exist infinite-dimensional vector spaces having the (Hamel) dimension of the cardinality of A (e.g., the space of functions $A \to K$ with finitely many nonzero values, where K is the desired field of scalars). Furthermore, the argument t could lie in any set instead of the set of real numbers.

Integral and derivative

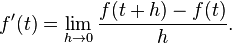

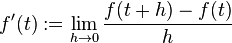

If, e.g., $f:[0,1]\rightarrow X$, where X is a Banach space or another topological vector space, the derivative of f can be defined in the standard way:

$$f'(t) := \lim_{h\to 0}\frac{f(t+h)-f(t)}{h}.$$
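As a worked instance of this definition, assume the illustrative sequence-valued function with components $f_k(t) = t/k^2$ (an assumption made for this sketch). Its difference quotient does not depend on h, so the limit exists whichever topology is chosen on X:

```latex
\frac{f(t+h) - f(t)}{h}
  = \left( \frac{(t+h)/k^2 - t/k^2}{h} \right)_{k \ge 1}
  = \left( \frac{1}{k^2} \right)_{k \ge 1},
\qquad\text{hence}\qquad
f'(t) = \left( 1, \tfrac{1}{4}, \tfrac{1}{9}, \ldots \right).
```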

The measurability of f can be defined in a number of ways, the most important of which are Bochner measurability and weak measurability.

The most important integrals of f are the Bochner integral (when X is a Banach space) and the Pettis integral (when X is a topological vector space). Both of these integrals commute with continuous linear functionals. $L^p$ spaces have also been defined for such functions.
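The commutation property can be written out as follows. Here $\varphi$ denotes a continuous linear functional on X and $\mu$ a measure on a set E over which f is integrated; these symbols are introduced for this sketch and do not appear in the text above.

```latex
\varphi\!\left( \int_E f \, d\mu \right)
  = \int_E \varphi\bigl(f(t)\bigr) \, d\mu(t)
\qquad \text{for every } \varphi \in X^{*}.
```

For the Pettis integral this identity is essentially the definition; for the Bochner integral it follows from the construction of the integral.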

Most theorems on integration and differentiation of scalar functions can be generalized to vector-valued functions, often using essentially the same proofs. Perhaps the most important exception is that absolutely continuous functions need not equal the integrals of their (a.e.) derivatives (unless, e.g., X is a Hilbert space); see the Radon–Nikodym theorem.

Derivative

Functions with values in a Hilbert space

If f is a function of real numbers with values in a Hilbert space X, then the derivative of f at a point t can be defined as in the finite-dimensional case:

$$f'(t) = \lim_{h\to 0}\frac{f(t+h)-f(t)}{h}.$$

Most results of the finite-dimensional case also hold in the infinite-dimensional case, mutatis mutandis. Differentiation can also be defined for functions of several variables (e.g., $t \in \mathbb{R}^n$ or even $t \in Y$, where Y is an infinite-dimensional vector space).

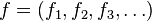

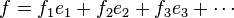

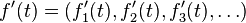

N.B. If X is a Hilbert space, then one can easily show that any derivative (and any other limit) can be computed componentwise: if

$$f = (f_1, f_2, f_3, \ldots)$$

(i.e., $f = f_1 e_1 + f_2 e_2 + f_3 e_3 + \cdots$, where $e_1, e_2, e_3, \ldots$ is an orthonormal basis of the space X), and $f'(t)$ exists, then

$$f'(t) = (f_1'(t), f_2'(t), f_3'(t), \ldots).$$

However, the existence of a componentwise derivative does not guarantee the existence of a derivative, as componentwise convergence in a Hilbert space does not guarantee convergence with respect to the actual topology of the Hilbert space.
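A numerical sketch of this failure, under assumptions not in the text above: take $X = \ell^2$ and $f_k(t) = \sin(kt)/k$. Every component is differentiable with $f_k'(0) = 1$, but the componentwise candidate $(1, 1, 1, \ldots)$ is not an element of $\ell^2$, so $f'(0)$ cannot exist. The truncated computation below (the function name `quotient_norm` is hypothetical) shows the $\ell^2$ norm of the difference quotient at t = 0 growing as h shrinks instead of settling down.

```python
import math

def quotient_norm(h, n_terms=200_000):
    """l^2 norm of (f(h) - f(0)) / h, truncated to n_terms components,
    assuming f_k(t) = sin(k t) / k (so f(0) = 0)."""
    total = 0.0
    for k in range(1, n_terms + 1):
        q = math.sin(k * h) / (k * h)  # k-th component of the difference quotient
        total += q * q
    return math.sqrt(total)

# The norms should increase as h decreases, since the quotient has no l^2 limit.
norms = [quotient_norm(h) for h in (0.1, 0.01, 0.001)]
print(norms)
```

For this particular choice the untruncated squared norm is $(\pi - h)/(2h)$ for $0 < h \le \pi$, which is unbounded as h tends to 0, even though each individual component of the quotient converges to 1.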

Other infinite-dimensional vector spaces

Most of the above holds for other topological vector spaces X too. However, fewer classical results hold in the Banach space setting; e.g., an absolutely continuous function with values in a suitable Banach space need not have a derivative anywhere. Moreover, in most Banach space settings there are no orthonormal bases.

References

- Einar Hille & Ralph Phillips: "Functional Analysis and Semi Groups", Amer. Math. Soc. Colloq. Publ. Vol. 31, Providence, R.I., 1957.