Radial basis function

A radial basis function (RBF) is a real-valued function $\phi$ whose value depends only on the distance from the origin, so that $\phi(\mathbf{x}) = \phi(\|\mathbf{x}\|)$; or alternatively on the distance from some other point $\mathbf{c}$, called a center, so that $\phi(\mathbf{x}, \mathbf{c}) = \phi(\|\mathbf{x} - \mathbf{c}\|)$. Any function $\phi$ that satisfies the property $\phi(\mathbf{x}) = \phi(\|\mathbf{x}\|)$ is a radial function. The norm is usually the Euclidean distance, although other distance functions are also possible.

Sums of radial basis functions are typically used to approximate given functions. This approximation process can also be interpreted as a simple kind of neural network; this was the context in which they originally surfaced, in work by David Broomhead and David Lowe in 1988,[1][2] which stemmed from Michael J. D. Powell's seminal research from 1977.[3][4][5] RBFs are also used as a kernel in support vector classification.[6]

Types

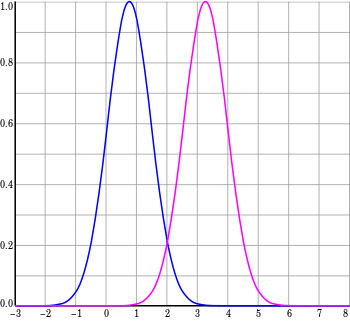

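Commonly used types of radial basis functions include (writing $r = \|\mathbf{x} - \mathbf{x}_i\|$, with $\varepsilon$ a shape parameter that scales the distance):

- Multiquadric: $\phi(r) = \sqrt{1 + (\varepsilon r)^2}$

- Inverse quadratic: $\phi(r) = \dfrac{1}{1 + (\varepsilon r)^2}$

- Inverse multiquadric: $\phi(r) = \dfrac{1}{\sqrt{1 + (\varepsilon r)^2}}$

- Thin plate spline (a special polyharmonic spline): $\phi(r) = r^2 \ln(r)$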
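The four kernels named in the list can be written down directly as functions of the distance r. The following is a minimal sketch using NumPy; the function names and the `eps` argument (a shape parameter scaling the distance) are illustrative choices, not part of any particular library.

```python
import numpy as np

def multiquadric(r, eps=1.0):
    # sqrt(1 + (eps * r)^2): grows roughly linearly for large r
    return np.sqrt(1.0 + (eps * r) ** 2)

def inverse_quadratic(r, eps=1.0):
    # 1 / (1 + (eps * r)^2): decays towards zero for large r
    return 1.0 / (1.0 + (eps * r) ** 2)

def inverse_multiquadric(r, eps=1.0):
    # 1 / sqrt(1 + (eps * r)^2)
    return 1.0 / np.sqrt(1.0 + (eps * r) ** 2)

def thin_plate_spline(r):
    # r^2 * ln(r), with the value at r = 0 taken as its limit, 0
    r = np.asarray(r, dtype=float)
    safe = np.where(r > 0, r, 1.0)  # avoid evaluating log(0)
    return np.where(r > 0, r ** 2 * np.log(safe), 0.0)

# Evaluate each kernel on a few sample distances.
r = np.array([0.0, 0.5, 1.0, 2.0])
values = {
    "multiquadric": multiquadric(r),
    "inverse_quadratic": inverse_quadratic(r),
    "inverse_multiquadric": inverse_multiquadric(r),
    "thin_plate_spline": thin_plate_spline(r),
}
```

Each function accepts a scalar or an array of non-negative distances, so the same code can fill a whole matrix of pairwise distances at once.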

Approximation

Radial basis functions are typically used to build up function approximations of the form

$$y(\mathbf{x}) = \sum_{i=1}^{N} w_i \, \phi(\|\mathbf{x} - \mathbf{x}_i\|),$$

where the approximating function $y(\mathbf{x})$ is represented as a sum of $N$ radial basis functions, each associated with a different center $\mathbf{x}_i$ and weighted by an appropriate coefficient $w_i$. The weights $w_i$ can be estimated using the matrix methods of linear least squares, because the approximating function is linear in the weights.
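Because the approximant is linear in the weights, fitting reduces to an ordinary least-squares problem on a matrix of kernel values. The sketch below illustrates this in one dimension with a multiquadric kernel; the target function, the number of centers, the shape parameter `eps`, and all function names are assumptions chosen for the example.

```python
import numpy as np

def multiquadric(r, eps=1.0):
    return np.sqrt(1.0 + (eps * r) ** 2)

def design_matrix(x, centers, eps=1.0):
    # Entry (j, i) is phi(|x_j - c_i|): sample j against center i.
    r = np.abs(x[:, None] - centers[None, :])
    return multiquadric(r, eps)

def fit_weights(x, y, centers, eps=1.0):
    # Solve min_w ||Phi w - y||^2 with a standard least-squares routine.
    Phi = design_matrix(x, centers, eps)
    w, *_ = np.linalg.lstsq(Phi, y, rcond=None)
    return w

def predict(x, centers, w, eps=1.0):
    # y(x) = sum_i w_i * phi(|x - c_i|)
    return design_matrix(x, centers, eps) @ w

# Illustrative data: 50 samples of one period of a sine wave, 10 centers.
x_train = np.linspace(0.0, 1.0, 50)
y_train = np.sin(2.0 * np.pi * x_train)
centers = np.linspace(0.0, 1.0, 10)

w = fit_weights(x_train, y_train, centers, eps=5.0)
max_err = np.max(np.abs(predict(x_train, centers, w, eps=5.0) - y_train))
```

With more samples than centers the system is overdetermined and the fit is a least-squares approximation; choosing the centers equal to the sample points would turn it into interpolation.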

Approximation schemes of this kind have been used in particular for time series prediction, for the control of nonlinear systems exhibiting sufficiently simple chaotic behaviour, and for 3D reconstruction in computer graphics (for example, hierarchical RBF and Pose Space Deformation).

RBF Network

The sum

$$y(\mathbf{x}) = \sum_{i=1}^{N} w_i \, \phi(\|\mathbf{x} - \mathbf{x}_i\|)$$

can also be interpreted as a rather simple single-layer type of artificial neural network called a radial basis function network, with the radial basis functions taking on the role of the activation functions of the network. It can be shown that any continuous function on a compact interval can in principle be interpolated with arbitrary accuracy by a sum of this form, if a sufficiently large number $N$ of radial basis functions is used.

The approximant $y(\mathbf{x})$ is differentiable with respect to the weights $w_i$. The weights could thus be learned using any of the standard iterative methods for neural networks.
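As one illustration of such an iterative method, the sketch below trains the weights by plain batch gradient descent on the mean squared error. Gradient descent is only one possible choice; the kernel, the data, the step count, and the step-size rule (derived from the largest singular value of the kernel matrix so that the loss cannot increase) are assumptions made for this example.

```python
import numpy as np

def inverse_quadratic(r, eps=1.0):
    return 1.0 / (1.0 + (eps * r) ** 2)

def train_weights(Phi, y, steps=500):
    # Loss: L(w) = mean((Phi w - y)^2); gradient: (2/m) Phi^T (Phi w - y).
    m = Phi.shape[0]
    # Step size 1/L_max, where L_max = 2 * sigma_max(Phi)^2 / m is the
    # largest curvature of the quadratic loss.
    lr = m / (2.0 * np.linalg.norm(Phi, 2) ** 2)
    w = np.zeros(Phi.shape[1])
    losses = []
    for _ in range(steps):
        residual = Phi @ w - y
        losses.append(np.mean(residual ** 2))
        grad = (2.0 / m) * (Phi.T @ residual)
        w -= lr * grad
    return w, losses

# Illustrative data: fit y = x^2 on [-1, 1] with 8 centers.
x = np.linspace(-1.0, 1.0, 40)
y = x ** 2
centers = np.linspace(-1.0, 1.0, 8)
Phi = inverse_quadratic(np.abs(x[:, None] - centers[None, :]), eps=2.0)

w, losses = train_weights(Phi, y)
```

Since the loss is quadratic in the weights, this iteration approaches the same minimizer that a direct least-squares solve would give, only more slowly; the iterative form becomes useful when the centers or shape parameters are learned as well.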

Using radial basis functions in this manner yields a reasonable interpolation approach provided that the fitting set has been chosen such that it covers the entire range systematically (equidistant data points are ideal). However, without a polynomial term that is orthogonal to the radial basis functions, estimates outside the fitting set tend to perform poorly.
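One common way to add such a polynomial term, not spelled out in the text, is to augment the interpolation system with a linear polynomial and to constrain the weights to be orthogonal to it. The following sketch assumes one-dimensional data, an inverse multiquadric kernel, and centers placed at the data points; the function names and parameter values are hypothetical.

```python
import numpy as np

def inverse_multiquadric(r, eps=1.0):
    return 1.0 / np.sqrt(1.0 + (eps * r) ** 2)

def fit_with_linear_term(x, y, eps=1.0):
    # Solve the block system
    #   [ A   P ] [ w ]   [ y ]
    #   [ P^T 0 ] [ c ] = [ 0 ]
    # where A holds kernel values and P = [1, x] is the linear polynomial.
    n = len(x)
    A = inverse_multiquadric(np.abs(x[:, None] - x[None, :]), eps)
    P = np.column_stack([np.ones(n), x])
    K = np.block([[A, P], [P.T, np.zeros((2, 2))]])
    rhs = np.concatenate([y, np.zeros(2)])
    sol = np.linalg.solve(K, rhs)
    return sol[:n], sol[n:]  # RBF weights w, polynomial coefficients c

def evaluate(x_new, x, w, c, eps=1.0):
    A = inverse_multiquadric(np.abs(x_new[:, None] - x[None, :]), eps)
    return A @ w + c[0] + c[1] * x_new

# Illustrative data drawn from the line y = 2x + 1 on [0, 1].
x = np.linspace(0.0, 1.0, 6)
y = 2.0 * x + 1.0
w, c = fit_with_linear_term(x, y, eps=5.0)

# Evaluate well outside the fitting set; for linear data the polynomial
# term carries the trend, so this is expected to be close to 7.0.
far = evaluate(np.array([3.0]), x, w, c, eps=5.0)
```

The constraint $P^{\mathsf{T}} w = 0$ is what makes the radial part orthogonal to the polynomial part, so linear trends are reproduced exactly and the radial terms only model the remainder.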

References

- Radial Basis Function networks

- Broomhead, David H.; Lowe, David (1988). "Multivariable Functional Interpolation and Adaptive Networks" (PDF). Complex Systems. 2: 321–355. Archived from the original (PDF) on 2014-07-14.

- Powell, Michael J. D. (1977). "Restart procedures for the conjugate gradient method" (PDF). Mathematical Programming. Springer. 12 (1): 241–254. doi:10.1007/bf01593790.

- Sahin, Ferat (1997). A Radial Basis Function Approach to a Color Image Classification Problem in a Real Time Industrial Application (PDF) (M.Sc.). Virginia Tech. p. 26. "Radial basis functions were first introduced by Powell to solve the real multivariate interpolation problem."

- Broomhead & Lowe 1988, p. 347: "We would like to thank Professor M.J.D. Powell at the Department of Applied Mathematics and Theoretical Physics at Cambridge University for providing the initial stimulus for this work."

- VanderPlas, Jake (6 May 2015). "Introduction to Support Vector Machines". O'Reilly. Retrieved 14 May 2015.

Further reading

- Buhmann, Martin D. (2003), Radial Basis Functions: Theory and Implementations, Cambridge University Press, ISBN 978-0-521-63338-3.

- Hardy, R.L. (1971). "Multiquadric equations of topography and other irregular surfaces". Journal of Geophysical Research. 76 (8): 1905–1915. Bibcode:1971JGR....76.1905H. doi:10.1029/jb076i008p01905.

- Hardy, R.L. (1990). "Theory and applications of the multiquadric-biharmonic method, 20 years of Discovery, 1968–1988". Computers & Mathematics with Applications. 19 (8/9): 163–208. doi:10.1016/0898-1221(90)90272-l.

- Press, WH; Teukolsky, SA; Vetterling, WT; Flannery, BP (2007), "Section 3.7.1. Radial Basis Function Interpolation", Numerical Recipes: The Art of Scientific Computing (3rd ed.), New York: Cambridge University Press, ISBN 978-0-521-88068-8

- Sirayanone, S. (1988). Comparative studies of kriging, multiquadric-biharmonic, and other methods for solving mineral resource problems (PhD dissertation). Dept. of Earth Sciences, Iowa State University, Ames, Iowa.

- Sirayanone, S.; Hardy, R.L. (1995). "The Multiquadric-biharmonic Method as Used for Mineral Resources, Meteorological, and Other Applications". Journal of Applied Sciences and Computations. 1: 437–475.