Two-dimensional singular value decomposition

Two-dimensional singular value decomposition (2DSVD) computes the low-rank approximation of a set of matrices, such as 2D images or weather maps, in a manner almost identical to SVD (singular value decomposition), which computes the low-rank approximation of a single matrix (or a set of 1D vectors).

SVD

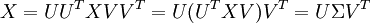

Let the matrix $X = [\mathbf{x}_1, \ldots, \mathbf{x}_n]$ contain a set of 1D vectors that have been centered. In PCA/SVD, we construct the covariance matrix $F$ and the Gram matrix $G$,

$$F = X X^{\mathsf T}, \qquad G = X^{\mathsf T} X,$$

and compute their eigenvectors $U$ and $V$. Since $V V^{\mathsf T} = I$ and $U U^{\mathsf T} = I$, we have

$$X = U U^{\mathsf T} X V V^{\mathsf T} = U \left( U^{\mathsf T} X V \right) V^{\mathsf T} = U \Sigma V^{\mathsf T}.$$

If we retain only $K$ principal eigenvectors in $U$ and $V$, this gives a low-rank approximation of $X$.
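As an illustration, the construction can be sketched in NumPy. This is a minimal sketch under stated assumptions, not a reference implementation: the random data, the sizes, and the helper name `top_eigvecs` are hypothetical, and the comparison with `np.linalg.svd` is included only as a sanity check.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: n = 8 vectors of dimension 5, stored as the columns of X.
X = rng.standard_normal((5, 8))
X = X - X.mean(axis=1, keepdims=True)  # center the vectors

F = X @ X.T  # covariance matrix (unnormalized), 5 x 5
G = X.T @ X  # Gram matrix, 8 x 8


def top_eigvecs(S, k):
    """Return the k eigenvectors of the symmetric matrix S with the largest eigenvalues."""
    w, Q = np.linalg.eigh(S)  # eigh returns eigenvalues in ascending order
    order = np.argsort(w)[::-1][:k]
    return Q[:, order]


K = 2
U_K = top_eigvecs(F, K)  # 5 x K
V_K = top_eigvecs(G, K)  # 8 x K

Sigma_K = U_K.T @ X @ V_K    # K x K, diagonal up to the signs of the eigenvectors
X_K = U_K @ Sigma_K @ V_K.T  # rank-K approximation of X

# Sanity check against the truncated SVD computed directly.
Us, s, Vt = np.linalg.svd(X, full_matrices=False)
X_svd = (Us[:, :K] * s[:K]) @ Vt[:K]
err = np.linalg.norm(X_K - X_svd)
print("difference from truncated SVD:", err)
```

The signs of individual eigenvectors are arbitrary, so `Sigma_K` may contain negative diagonal entries, but the product `X_K` does not depend on them.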

2DSVD

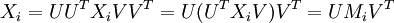

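Here we deal with a set of 2D matrices $(X_1, \ldots, X_n)$. Suppose they are centered, $\sum_i X_i = 0$. We construct the row–row and column–column covariance matrices

$$F = \sum_i X_i X_i^{\mathsf T}, \qquad G = \sum_i X_i^{\mathsf T} X_i,$$

in exactly the same manner as in SVD, and compute their eigenvectors $U$ and $V$. Retaining only the principal eigenvectors in $U$ and $V$, we approximate $X_i$ as

$$X_i \approx U U^{\mathsf T} X_i V V^{\mathsf T} = U \left( U^{\mathsf T} X_i V \right) V^{\mathsf T} = U M_i V^{\mathsf T}, \qquad M_i = U^{\mathsf T} X_i V,$$

in identical fashion as in SVD.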

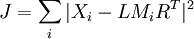

This gives a near-optimal low-rank approximation of $(X_1, \ldots, X_n)$ with the objective function

$$J = \sum_{i=1}^{n} \left\| X_i - U M_i V^{\mathsf T} \right\|_F^2 .$$
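The 2DSVD construction and its objective value can be sketched in NumPy as follows. This is an illustrative sketch, assuming random data and a squared Frobenius norm in the objective; the helper names `top_eigvecs` and `two_dsvd_objective`, the matrix sizes, and the numbers of retained eigenvectors are hypothetical choices, not taken from the references.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: n = 20 matrices of size 6 x 5, stacked so that A[i] is X_i.
n, r, c = 20, 6, 5
A = rng.standard_normal((n, r, c))
A = A - A.mean(axis=0)  # center the set: sum_i X_i = 0

F = sum(Xi @ Xi.T for Xi in A)  # row-row covariance, r x r
G = sum(Xi.T @ Xi for Xi in A)  # column-column covariance, c x c


def top_eigvecs(S, k):
    """Return the k eigenvectors of the symmetric matrix S with the largest eigenvalues."""
    w, Q = np.linalg.eigh(S)  # eigh returns eigenvalues in ascending order
    order = np.argsort(w)[::-1][:k]
    return Q[:, order]


def two_dsvd_objective(A, F, G, k, s):
    """Approximate every X_i as U M_i V^T and return J = sum_i ||X_i - U M_i V^T||_F^2."""
    U = top_eigvecs(F, k)  # r x k
    V = top_eigvecs(G, s)  # c x s
    M = U.T @ A @ V        # matmul broadcasts over i: M[i] = U^T X_i V, shape (n, k, s)
    A_hat = U @ M @ V.T    # A_hat[i] = U M_i V^T, shape (n, r, c)
    return float(np.sum((A - A_hat) ** 2))


J_small = two_dsvd_objective(A, F, G, 1, 1)
J_mid = two_dsvd_objective(A, F, G, 3, 3)
J_full = two_dsvd_objective(A, F, G, r, c)  # all eigenvectors retained
print(J_small, J_mid, J_full)
```

Each $X_i$ is then represented by the small matrix `M[i]` together with the shared factors `U` and `V`. Retaining more eigenvectors cannot increase $J$, and retaining all of them reproduces every $X_i$ up to rounding error.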

Error bounds similar to the Eckart–Young theorem also exist.

2DSVD is mostly used in image compression and representation.

References

- Chris Ding and Jieping Ye. "Two-dimensional Singular Value Decomposition (2DSVD) for 2D Maps and Images". Proc. SIAM Int'l Conf. Data Mining (SDM'05), pp. 32–43, April 2005. http://ranger.uta.edu/~chqding/papers/2dsvdSDM05.pdf

- Jieping Ye. "Generalized Low Rank Approximations of Matrices". Machine Learning Journal. Vol. 61, pp. 167–191, 2005.