Language processing in the brain

Language processing refers to the way humans use words to communicate ideas and feelings, and how such communications are processed and understood. Thus it is how the brain creates and understands language. Most recent theories consider that this process is carried out entirely by and inside the brain.

This is considered one of the most characteristic abilities of the human species, perhaps the most characteristic. However, very little is known about it, and there is huge scope for further research. The brain's language system also shapes the way language learners learn and think.

Most of the knowledge acquired to date on the subject has come from patients who have suffered some type of significant head injury, whether external (wounds, bullets) or internal (strokes, tumors, degenerative diseases).

Studies have shown that most of the language processing functions are carried out in the cerebral cortex. The essential function of the cortical language areas is symbolic representation. Even though language exists in different forms, all of them are based on symbolic representation.[1]

Neural structures subserving language processing

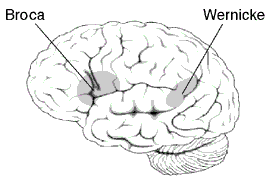

Much of the language function is processed in several association areas, and there are two well-identified areas that are considered vital for human communication: Wernicke's area and Broca's area. These areas are usually located in the dominant hemisphere (the left hemisphere in 97% of people) and are considered the most important areas for language processing. This is why language is considered a localized and lateralized function.[2]

However, the less-dominant hemisphere also participates in this cognitive function, and there is ongoing debate on the level of participation of the less-dominant areas.[3]

Other factors are believed to be relevant to language processing and verbal fluency, such as cortical thickness, participation of prefrontal areas of the cortex, and communication between right and left hemispheres.

Wernicke's area

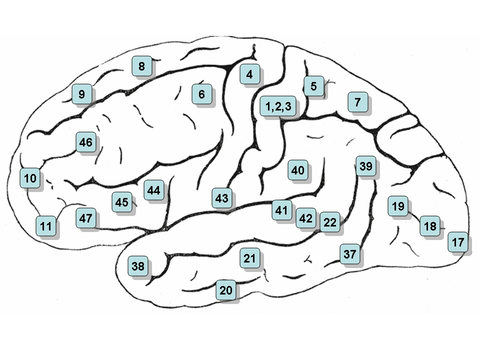

Wernicke's area is classically located in the posterior section of the superior temporal gyrus of the dominant hemisphere (Brodmann area 22), with some branches extending around the posterior section of the lateral sulcus, in the parietal lobe.[4]

Wernicke's area lies roughly between the auditory cortex and the visual cortex. The former is located in the transverse temporal gyrus (Brodmann areas 41 and 42), in the temporal lobe, while the latter is located in the posterior section of the occipital lobe (Brodmann areas 17, 18 and 19).[4]

While the dominant hemisphere is in charge of most of language comprehension, recent studies have demonstrated that the homologous area in the less-dominant hemisphere (the right hemisphere in 97% of people) participates in the comprehension of ambiguous words, whether they are written or heard.[5]

Receptive speech has traditionally been associated with Wernicke's area of the posterior superior temporal gyrus (STG) and surrounding areas. Current models of speech perception include greater Wernicke's area, but also implicate a "dorsal" stream that includes regions also involved in speech motor processing.[6]

Wernicke's area was first identified by Carl Wernicke in 1874. Its main function is the comprehension of language and the ability to communicate coherent ideas, whether the language is vocal, written, or signed.[2]

Broca's area

Broca's area is usually formed by the pars opercularis and the pars triangularis of the inferior frontal gyrus (Brodmann areas 44 and 45, respectively). Like Wernicke's area, it is usually located in the left hemisphere of the brain.[4]

Broca's area is involved mostly in the production of speech. Given its proximity to the motor cortex, neurons from Broca's area send signals to the larynx, tongue and mouth motor areas, which in turn send the signals to the corresponding muscles, thus allowing the creation of sounds.[4]

A recent analysis of the specific roles of these sections of the left inferior frontal gyrus in verbal fluency indicates that Brodmann area 44 (pars opercularis) may subserve phonological fluency, whereas Brodmann area 45 (pars triangularis) may be more involved in semantic fluency.[7]

Arcuate fasciculus

The arcuate fasciculus is a bundle of nerve fibers that connects Wernicke's area and Broca's area. It serves as a transit pathway between the two areas most closely involved in speech and communication.[8]

Cortical thickness and verbal fluency

Recent studies have shown that the rate of increase in raw vocabulary fluency was positively correlated with the rate of cortical thinning. In other words, greater performance improvements were associated with greater thinning. This is more evident in left hemisphere regions, including the left lateral dorsal frontal and left lateral parietal regions: the usual locations of Broca's area and Wernicke's area, respectively.[7]

After Sowell's studies, it was hypothesized that increased performance on the verbal fluency test would correlate with decreased cortical thickness in regions that have been associated with language: the middle and superior temporal cortex, the temporal–parietal junction, and inferior and middle frontal cortex. Additionally, other areas related to sustained attention for executive tasks were also expected to be affected by cortical thinning.[7]

One theory for the relation between cortical thinning and improved language fluency concerns the effect that synaptic pruning has on signaling between neurons. If cortical thinning reflects synaptic pruning, then pruning may occur relatively early for language-based abilities. The functional benefit would be a tightly honed neural system that is impervious to "neural interference", avoiding undesired signals running through the neurons, which could worsen verbal fluency.[7]

The strongest correlations between language fluency and cortical thickness were found in the temporal lobe and the temporal–parietal junction. Significant correlations were also found in the auditory cortex, the somatosensory cortex related to the organs responsible for speech (lips, tongue and mouth), and frontal and parietal regions related to attention and performance monitoring. The frontal and parietal correlations are also evident in the right hemisphere.[7]

Oral language

Speech perception

Acoustic stimuli are received by the auditory organ and converted to bioelectric signals in the organ of Corti. These electrical impulses are then carried through the spiral ganglion and the vestibulocochlear nerve to the primary auditory cortex in both hemispheres. Each hemisphere, however, treats the signal differently: while the left side recognizes distinctive parts such as phonemes, the right side handles prosodic characteristics and melodic information.

The signal is then transported to Wernicke's area in the left hemisphere (the information that was being processed in the right hemisphere is able to cross through inter-hemispheric axons), where the analysis noted above takes place.

During speech comprehension, activations are focused in and around Wernicke's area. A large body of evidence supports a role for the posterior superior temporal gyrus in acoustic–phonetic aspects of speech processing, whereas more ventral sites such as the posterior middle temporal gyrus (pMTG) are thought to play a higher linguistic role linking the auditory word form to broadly distributed semantic knowledge.[6]

Also, the pMTG site shows significant activation during the semantic association interval of the verb generation and picture naming tasks, in contrast to the pSTG sites that remain at or below baseline levels during this interval. This is consistent with a greater lexical–semantic role for pMTG relative to a more acoustic–phonetic role for pSTG.[6]

Semantic association

Early visual processing and object recognition take place in inferior temporal areas (the "what" pathway), where the signal arrives from the primary and secondary visual cortices. The representation of the object in the "what" pathway and nearby inferior temporal areas itself constitutes a major aspect of the conceptual–semantic representation. Additional semantic and syntactic associations are also activated, and during this interval of highly variable duration (depending on the subject, the difficulty of the current object, etc.), the word to be spoken is selected. This involves some of the same sites involved in the semantic selection stage of verb generation: the prefrontal cortex (PFC), the supramarginal gyrus (SMG), and other association areas.[6]

Speech production

From Wernicke's area, the signal is taken to Broca's area through the arcuate fasciculus. Speech production activations begin prior to the verbal response in the peri-Rolandic cortices (pre- and postcentral gyri). The role of ventral peri-Rolandic cortices in speech motor functions has long been appreciated (Broca's area). The superior portion of the ventral premotor cortex (sPMv) also exhibits auditory responses preferential to speech stimuli and is part of the dorsal stream.[6]

Involvement of Wernicke's area in speech production has been suggested. Recent studies document the participation of traditional Wernicke's area (mid- to posterior superior temporal gyrus) only in post-response auditory feedback, while demonstrating a clear pre-response activation from the nearby temporal–parietal junction (TPJ).[6]

It is believed that the common route to speech production is through verbal and phonological working memory using the same dorsal stream areas (temporal–parietal junction, sPMv) implicated in speech perception and phonological working memory. The observed pre-response activations at these dorsal stream sites are suggested to subserve phonological encoding and its translation to the articulatory score for speech. Post-response Wernicke's activations, on the other hand, are involved strictly in auditory self-monitoring.[6]

Several authors support a model in which the route to speech production runs essentially in reverse of speech perception, that is, from the conceptual level to word form to phonological representation.[6]

Aphasia

Acquired language disorders associated with brain damage are called aphasias. Depending on the location of the damage, aphasias can present in several different ways.

The aphasias listed below are examples of acute aphasias which can result from brain injury or stroke.

- Expressive aphasia: Usually characterized as a nonfluent aphasia, this language disorder is present when injury or damage occurs to or near Broca's area. Individuals with this disorder have a hard time producing speech, although most of their cognitive functions remain intact and they are still able to understand language. They frequently omit small words. They are aware of their language disorder and may get frustrated.[9]

- Receptive aphasia: Individuals with receptive aphasia are able to produce speech without a problem. However, most of the words they produce lack coherence. At the same time, they have a hard time understanding what others try to communicate. They are often unaware of their mistakes. This disorder occurs when Wernicke's area is damaged.[10]

- Conduction aphasia: Characterized by poor speech repetition, this disorder is rather uncommon and happens when branches of the arcuate fasciculus are damaged.[11] Auditory perception is practically intact, and speech generation is maintained. Patients with this disorder will be aware of their errors, and will show significant difficulty correcting them.[12][13]

References

- Pinker, Steven (1994). The language instinct: how the mind creates language. New York: W. Morrow and Co. ISBN 0-688-12141-1. OCLC 750573781.

- Fitzpatrick, David; Purves, Dale; Augustine, George (2004). "27 Language and Lateralization". Neuroscience. Sunderland, Mass: Sinauer. ISBN 0-87893-725-0. OCLC 443194765.

- Manenti R, Cappa SF, Rossini PM, Miniussi C (March 2008). "The role of the prefrontal cortex in sentence comprehension: an rTMS study". Cortex. 44 (3): 337–44. doi:10.1016/j.cortex.2006.06.006. PMID 18387562.

- Snell, Richard S. (2003). Neuroanatomia Clinica (Spanish Edition). Editorial Medica Panamericana. ISBN 950-06-2049-9. OCLC 806507296.

- Harpaz Y, Levkovitz Y, Lavidor M (October 2009). "Lexical ambiguity resolution in Wernicke's area and its right homologue". Cortex. 45 (9): 1097–103. doi:10.1016/j.cortex.2009.01.002. PMID 19251255.

- Edwards E, Nagarajan SS, Dalal SS, et al. (March 2010). "Spatiotemporal imaging of cortical activation during verb generation and picture naming". NeuroImage. 50 (1): 291–301. doi:10.1016/j.neuroimage.2009.12.035. PMC 2957470. PMID 20026224.

- Porter JN, Collins PF, Muetzel RL, Lim KO, Luciana M (April 2011). "Associations between cortical thickness and verbal fluency in childhood, adolescence, and young adulthood". NeuroImage. 55 (4): 1865–77. doi:10.1016/j.neuroimage.2011.01.018. PMC 3063407. PMID 21255662.

- Mihalic̆ek, Vedrana; Wilson, Christin, eds. (2011). Language files: materials for an introduction to language and linguistics (11th ed.). Columbus: Ohio State University Press. p. 359. ISBN 9780814251799.

- Bakheit AM, Shaw S, Carrington S, Griffiths S (October 2007). "The rate and extent of improvement with therapy from the different types of aphasia in the first year after stroke". Clin Rehabil. 21 (10): 941–9. doi:10.1177/0269215507078452. PMID 17981853.

- Hébert S, Racette A, Gagnon L, Peretz I (August 2003). "Revisiting the dissociation between singing and speaking in expressive aphasia". Brain. 126 (Pt 8): 1838–50. doi:10.1093/brain/awg186. PMID 12821526.

- Bernal B, Ardila A (September 2009). "The role of the arcuate fasciculus in conduction aphasia". Brain. 132 (Pt 9): 2309–16. doi:10.1093/brain/awp206. PMID 19690094.

- Damasio H, Damasio AR (June 1980). "The anatomical basis of conduction aphasia". Brain. 103 (2): 337–50. doi:10.1093/brain/103.2.337. PMID 7397481.

- Buchsbaum BR, Baldo J, Okada K, et al. (December 2011). "Conduction aphasia, sensory-motor integration, and phonological short-term memory - an aggregate analysis of lesion and fMRI data". Brain Lang. 119 (3): 119–28. doi:10.1016/j.bandl.2010.12.001. PMC 3090694. PMID 21256582.