Cryptographic hash function

A cryptographic hash function is a special class of hash function that has certain properties which make it suitable for use in cryptography. It is a mathematical algorithm that maps data of arbitrary size to a bit string of a fixed size (a hash function) which is designed to also be a one-way function, that is, a function which is infeasible to invert. The only way to recreate the input data from an ideal cryptographic hash function's output is to attempt a brute-force search of possible inputs to see if they produce a match. Bruce Schneier has called one-way hash functions "the workhorses of modern cryptography".[1] The input data is often called the message, and the output (the hash value or hash) is often called the message digest or simply the digest.

The ideal cryptographic hash function has five main properties:

- it is deterministic so the same message always results in the same hash

- it is quick to compute the hash value for any given message

- it is infeasible to generate a message from its hash value except by trying all possible messages

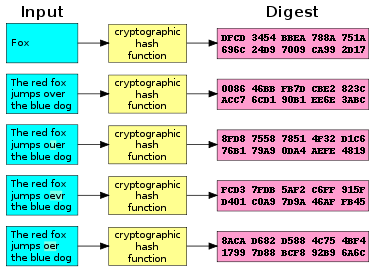

- a small change to a message should change the hash value so extensively that the new hash value appears uncorrelated with the old hash value

- it is infeasible to find two different messages with the same hash value
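The first and fourth properties can be observed directly. The following is a minimal sketch that uses SHA-256 from Python's standard `hashlib` module as a stand-in for an ideal function; the helper names `sha256_hex` and `differing_bits` and the two sample messages are illustrative choices, not part of any standard.

```python
import hashlib


def sha256_hex(message: bytes) -> str:
    """Return the SHA-256 digest of a message as a hexadecimal string."""
    return hashlib.sha256(message).hexdigest()


def differing_bits(a: bytes, b: bytes) -> int:
    """Count the bit positions in which the SHA-256 digests of a and b differ."""
    da = int.from_bytes(hashlib.sha256(a).digest(), "big")
    db = int.from_bytes(hashlib.sha256(b).digest(), "big")
    return bin(da ^ db).count("1")


# Two messages that differ in a single character.
m1 = b"The quick brown fox jumps over the lazy dog"
m2 = b"The quick brown fox jumps over the lazy cog"

print(sha256_hex(m1))
print(sha256_hex(m2))
print(differing_bits(m1, m2), "of 256 digest bits differ")
```

Hashing the same message twice gives the same digest, while the one-character change typically flips roughly half of the 256 output bits.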

Cryptographic hash functions have many information-security applications, notably in digital signatures, message authentication codes (MACs), and other forms of authentication. They can also be used as ordinary hash functions, to index data in hash tables, for fingerprinting, to detect duplicate data or uniquely identify files, and as checksums to detect accidental data corruption. Indeed, in information-security contexts, cryptographic hash values are sometimes called (digital) fingerprints, checksums, or just hash values, even though all these terms stand for more general functions with rather different properties and purposes.

Properties

Most cryptographic hash functions are designed to take a string of any length as input and produce a fixed-length hash value.

A cryptographic hash function must be able to withstand all known types of cryptanalytic attack. In theoretical cryptography, the security level of a cryptographic hash function has been defined using the following properties:

- Pre-image resistance

- Given a hash value h it should be difficult to find any message m such that h = hash(m). This concept is related to that of a one-way function. Functions that lack this property are vulnerable to preimage attacks.

- Second pre-image resistance

- Given an input m1 it should be difficult to find a different input m2 such that hash(m1) = hash(m2). Functions that lack this property are vulnerable to second-preimage attacks.

- Collision resistance

- It should be difficult to find two different messages m1 and m2 such that hash(m1) = hash(m2). Such a pair is called a cryptographic hash collision. This property is sometimes referred to as strong collision resistance. It requires a hash value at least twice as long as that required for preimage-resistance; otherwise collisions may be found by a birthday attack.[2]
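The effect of the birthday attack on short digests can be sketched with a deliberately weakened hash. The example below truncates SHA-256 to 24 bits (an artificial choice made only so that the search finishes quickly) and looks for two inputs with the same truncated digest; the function names and the counter-based message format are assumptions for illustration.

```python
import hashlib
from itertools import count


def truncated_hash(message: bytes, n_bytes: int = 3) -> bytes:
    """SHA-256 cut down to n_bytes, i.e. a deliberately weak toy hash."""
    return hashlib.sha256(message).digest()[:n_bytes]


def find_collision(n_bytes: int = 3):
    """Hash successive counter strings until two share a truncated digest.

    Returns the two colliding messages and the number of messages hashed.
    """
    seen = {}
    for i in count():
        message = str(i).encode()
        digest = truncated_hash(message, n_bytes)
        if digest in seen:
            return seen[digest], message, i + 1
        seen[digest] = message


m1, m2, tries = find_collision(3)
print(m1, m2, "collide after", tries, "hashes")
```

With a 24-bit digest, a collision usually appears after a few thousand hashes, on the order of 2^12, rather than the roughly 2^24 needed to hit one specific digest. This is why collision resistance needs a digest about twice as long as preimage resistance does.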

These properties form a hierarchy, in that collision resistance implies second pre-image resistance, which in turn implies pre-image resistance, while the converse is not true in general.[3] The weaker assumption is always preferred in theoretical cryptography, but in practice, a hash function which is only second pre-image resistant is considered insecure and is therefore not recommended for real applications.

Informally, these properties mean that a malicious adversary cannot replace or modify the input data without changing its digest. Thus, if two strings have the same digest, one can be very confident that they are identical.

A function meeting these criteria may still have undesirable properties. Currently popular cryptographic hash functions are vulnerable to length-extension attacks: given hash(m) and len(m) but not m, by choosing a suitable m' an attacker can calculate hash(m || m') where || denotes concatenation.[4] This property can be used to break naive authentication schemes based on hash functions. The HMAC construction works around these problems.
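As a sketch of the HMAC approach, the example below uses Python's standard `hmac` module with SHA-256 to authenticate a message under a shared secret key. The helper names `make_tag` and `verify_tag`, the key, and the message are hypothetical and chosen only for illustration.

```python
import hashlib
import hmac


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA-256 authentication tag for the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(make_tag(key, message), tag)


key = b"shared secret key (illustrative)"
message = b"amount=100&to=alice"
tag = make_tag(key, message)

print(verify_tag(key, message, tag))                # genuine message
print(verify_tag(key, message + b"&to=eve", tag))   # extended message
```

A naive scheme that computes hash(key || message) with a Merkle–Damgård hash would let an attacker who knows only the tag and the length of the input compute a valid tag for an extended message. HMAC's nested keyed construction is designed so that this does not work.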

Collision resistance alone is insufficient for many practical uses. In addition to collision resistance, it should be impossible for an adversary to find two messages with substantially similar digests, or to infer any useful information about the data given only its digest. In particular, a cryptographic hash function should behave as much as possible like a random function (often called a random oracle in proofs of security) while still being deterministic and efficiently computable. This rules out functions like the SWIFFT function, which can be rigorously proven to be collision resistant assuming that certain problems on ideal lattices are computationally difficult, but which, as a linear function, does not satisfy these additional properties.[5]

Checksum algorithms, such as CRC32 and other cyclic redundancy checks, are designed to meet much weaker requirements, and are generally unsuitable as cryptographic hash functions. For example, a CRC was used for message integrity in the WEP encryption standard, but an attack was readily discovered which exploited the linearity of the checksum.
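The linearity in question can be checked with `zlib.crc32` from the Python standard library. For messages of equal length, the CRC of the XOR of three messages equals the XOR of their CRCs, so an attacker can predict how a chosen bit-flip changes the checksum without knowing the message. This is a small sketch of that property only, not of the WEP attack itself; the helper names and sample messages are illustrative.

```python
import zlib


def xor_bytes(*parts: bytes) -> bytes:
    """XOR together byte strings of equal length."""
    out = bytearray(len(parts[0]))
    for part in parts:
        if len(part) != len(out):
            raise ValueError("all inputs must have the same length")
        for i, byte in enumerate(part):
            out[i] ^= byte
    return bytes(out)


def crc_is_affine(a: bytes, b: bytes, c: bytes) -> bool:
    """Check crc32(a ^ b ^ c) == crc32(a) ^ crc32(b) ^ crc32(c)."""
    combined = zlib.crc32(xor_bytes(a, b, c))
    return combined == zlib.crc32(a) ^ zlib.crc32(b) ^ zlib.crc32(c)


print(crc_is_affine(b"pay alice $100", b"pay alice $999", b"\x00" * 14))
```

A cryptographic hash function is designed so that no comparable algebraic relation between inputs and digests is known.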

Degree of difficulty

In cryptographic practice, "difficult" generally means "almost certainly beyond the reach of any adversary who must be prevented from breaking the system for as long as the security of the system is deemed important". The meaning of the term is therefore somewhat dependent on the application, since the effort that a malicious agent may put into the task is usually proportional to their expected gain. However, since the needed effort usually grows very quickly with the digest length, even a thousand-fold advantage in processing power can be neutralized by adding a few dozen bits to the latter.

For messages selected from a limited set of messages, for example passwords or other short messages, it can be feasible to invert a hash by trying all possible messages in the set. Because cryptographic hash functions are typically designed to be computed quickly, special key derivation functions that require greater computing resources have been developed that make such brute force attacks more difficult.

In some theoretical analyses "difficult" has a specific mathematical meaning, such as "not solvable in asymptotic polynomial time". Such interpretations of difficulty are important in the study of provably secure cryptographic hash functions but do not usually have a strong connection to practical security. For example, an exponential time algorithm can sometimes still be fast enough to make a feasible attack. Conversely, a polynomial time algorithm (e.g., one that requires n^20 steps for n-digit keys) may be too slow for any practical use.

Illustration

An illustration of the potential use of a cryptographic hash is as follows: Alice poses a tough math problem to Bob and claims she has solved it. Bob would like to try it himself, but would yet like to be sure that Alice is not bluffing. Therefore, Alice writes down her solution, computes its hash and tells Bob the hash value (whilst keeping the solution secret). Then, when Bob comes up with the solution himself a few days later, Alice can prove that she had the solution earlier by revealing it and having Bob hash it and check that it matches the hash value given to him before. (This is an example of a simple commitment scheme; in actual practice, Alice and Bob will often be computer programs, and the secret would be something less easily spoofed than a claimed puzzle solution).
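Alice's side of this exchange can be sketched as follows. One detail goes beyond the description above and is an assumption of this sketch: the solution is hashed together with a random 16-byte nonce, so that Bob cannot confirm a guess at a short or predictable solution by hashing candidates himself. The function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def commit(solution: bytes):
    """Alice: produce a commitment to publish and a nonce to keep secret."""
    nonce = secrets.token_bytes(16)  # fixed length, so nonce + solution is unambiguous
    commitment = hashlib.sha256(nonce + solution).hexdigest()
    return commitment, nonce


def verify(commitment: str, nonce: bytes, solution: bytes) -> bool:
    """Bob: check that the revealed nonce and solution match the commitment."""
    recomputed = hashlib.sha256(nonce + solution).hexdigest()
    return hmac.compare_digest(recomputed, commitment)


commitment, nonce = commit(b"x = 42")
print(commitment)                           # Alice sends this to Bob now
print(verify(commitment, nonce, b"x = 42"))  # later, after Alice reveals
```

Pre-image resistance keeps the solution hidden until it is revealed, and second pre-image resistance prevents Alice from later substituting a different solution for the same commitment.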

Applications

Verifying the integrity of files or messages

An important application of secure hashes is verification of message integrity. Determining whether any changes have been made to a message (or a file), for example, can be accomplished by comparing message digests calculated before, and after, transmission (or any other event).

For this reason, most digital signature algorithms only confirm the authenticity of a hashed digest of the message to be "signed". Verifying the authenticity of a hashed digest of the message is considered proof that the message itself is authentic.

MD5, SHA1, or SHA2 hashes are sometimes posted along with files on websites or forums to allow verification of integrity.[6] This practice establishes a chain of trust so long as the hashes are posted on a site authenticated by HTTPS.
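A download check of this kind might look like the sketch below, which reads a file in chunks and compares its digest with a published hexadecimal value. The function names and the default choice of SHA-256 are assumptions for illustration; the published value is whatever the distributing site lists.

```python
import hashlib
import hmac


def file_digest(path: str, algorithm: str = "sha256", chunk_size: int = 65536) -> str:
    """Hash a file incrementally so large files need not fit in memory."""
    hasher = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def matches_published(path: str, published_hex: str, algorithm: str = "sha256") -> bool:
    """Compare the file's digest with the value posted by the publisher."""
    expected = published_hex.strip().lower()
    return hmac.compare_digest(file_digest(path, algorithm), expected)
```

A match shows only that the file corresponds to the posted digest; the digest itself still has to come from a source the user trusts.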

Password verification

A related application is password verification (first invented by Roger Needham). Storing all user passwords as cleartext can result in a massive security breach if the password file is compromised. One way to reduce this danger is to only store the hash digest of each password. To authenticate a user, the password presented by the user is hashed and compared with the stored hash. (Note that this approach prevents the original passwords from being retrieved if forgotten or lost, and they have to be replaced with new ones.) The password is often concatenated with a random, non-secret salt value before the hash function is applied. The salt is stored with the password hash. Because users have different salts, it is not feasible to store tables of precomputed hash values for common passwords. Key stretching functions, such as PBKDF2, Bcrypt or Scrypt, typically use repeated invocations of a cryptographic hash to increase the time required to perform brute force attacks on stored password digests.
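The salted, stretched scheme described above can be sketched with `hashlib.pbkdf2_hmac` from the Python standard library. The 16-byte salt, the default iteration count, and the function names are illustrative assumptions rather than recommendations; real deployments should follow current guidance for the chosen function.

```python
import hashlib
import hmac
import os


def hash_password(password: str, iterations: int = 200_000):
    """Return (salt, iterations, digest) to store in place of the password."""
    salt = os.urandom(16)  # random, non-secret, unique per user
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, iterations, digest


def verify_password(password: str, salt: bytes, iterations: int, expected: bytes) -> bool:
    """Re-derive the digest from the presented password and compare."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return hmac.compare_digest(candidate, expected)


salt, iterations, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, iterations, stored))
print(verify_password("Tr0ub4dor&3", salt, iterations, stored))
```

Because each call draws a fresh salt, two users with the same password get different stored digests, and the iteration count multiplies the cost of every guess an attacker makes.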

In 2013 a long-term Password Hashing Competition was announced to choose a new, standard algorithm for password hashing.[7]

Proof-of-work

A proof-of-work system (or protocol, or function) is an economic measure to deter denial of service attacks and other service abuses such as spam on a network by requiring some work from the service requester, usually meaning processing time by a computer. A key feature of these schemes is their asymmetry: the work must be moderately hard (but feasible) on the requester side but easy to check for the service provider. One popular system, used in Bitcoin mining and Hashcash, uses partial hash inversions to prove that work was done, as a good-will token to send an e-mail. The sender is required to find a message whose hash value begins with a number of zero bits. The average work that the sender needs to perform in order to find a valid message is exponential in the number of zero bits required in the hash value, while the recipient can verify the validity of the message by executing a single hash function. For instance, in Hashcash, a sender is asked to generate a header whose 160-bit SHA-1 hash value has the first 20 bits as zeros. The sender will on average have to try 2^19 times to find a valid header.
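The asymmetry between minting and checking can be sketched as below. This is a simplified illustration in the spirit of Hashcash, not its actual header format: the `challenge:nonce` layout, the function names, and the small difficulty of 12 bits (chosen so the search finishes quickly) are all assumptions.

```python
import hashlib
from itertools import count


def leading_zero_bits(digest: bytes) -> int:
    """Number of zero bits at the start of a digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def stamp_digest(challenge: bytes, nonce: int) -> bytes:
    """SHA-1 of the challenge joined to a candidate nonce."""
    return hashlib.sha1(challenge + b":" + str(nonce).encode()).digest()


def mint(challenge: bytes, bits: int) -> int:
    """Requester: search for a nonce giving at least `bits` leading zero bits."""
    for nonce in count():
        if leading_zero_bits(stamp_digest(challenge, nonce)) >= bits:
            return nonce


def check(challenge: bytes, nonce: int, bits: int) -> bool:
    """Provider: verify the work with a single hash computation."""
    return leading_zero_bits(stamp_digest(challenge, nonce)) >= bits


nonce = mint(b"alice@example.com", 12)
print(nonce, check(b"alice@example.com", nonce, 12))
```

Each additional required zero bit roughly doubles the work done in `mint`, while `check` always costs one hash.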

File or data identifier

A message digest can also serve as a means of reliably identifying a file; several source code management systems, including Git, Mercurial and Monotone, use the sha1sum of various types of content (file content, directory trees, ancestry information, etc.) to uniquely identify them. Hashes are used to identify files on peer-to-peer filesharing networks. For example, in an ed2k link, an MD4-variant hash is combined with the file size, providing sufficient information for locating file sources, downloading the file and verifying its contents. Magnet links are another example. Such file hashes are often the top hash of a hash list or a hash tree which allows for additional benefits.
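As a concrete case, Git identifies a file's content (a "blob") by the SHA-1 digest of a short header, consisting of the word `blob`, the content length in bytes, and a NUL byte, followed by the content itself. The sketch below reproduces that computation; the function name `git_blob_id` is illustrative.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Compute the SHA-1 object ID Git assigns to a blob with this content."""
    header = b"blob " + str(len(content)).encode("ascii") + b"\x00"
    return hashlib.sha1(header + content).hexdigest()


# The empty blob has a well-known ID:
print(git_blob_id(b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391
```

Because the identifier depends only on the content, two files with identical bytes map to the same object regardless of their names or locations.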

One of the main applications of a hash function is to allow the fast look-up of data in a hash table. Being hash functions of a particular kind, cryptographic hash functions lend themselves well to this application too.

However, compared with standard hash functions, cryptographic hash functions tend to be much more expensive computationally. For this reason, they tend to be used in contexts where it is necessary for users to protect themselves against the possibility of forgery (the creation of data with the same digest as the expected data) by potentially malicious participants.

Pseudorandom generation and key derivation

Hash functions can also be used in the generation of pseudorandom bits, or to derive new keys or passwords from a single secure key or password.

Hash functions based on block ciphers

There are several methods to use a block cipher to build a cryptographic hash function, specifically a one-way compression function.

The methods resemble the block cipher modes of operation usually used for encryption. Many well-known hash functions, including MD4, MD5, SHA-1 and SHA-2 are built from block-cipher-like components designed for the purpose, with feedback to ensure that the resulting function is not invertible. SHA-3 finalists included functions with block-cipher-like components (e.g., Skein, BLAKE) though the function finally selected, Keccak, was built on a cryptographic sponge instead.

A standard block cipher such as AES can be used in place of these custom block ciphers; that might be useful when an embedded system needs to implement both encryption and hashing with minimal code size or hardware area. However, that approach can have costs in efficiency and security. The ciphers in hash functions are built for hashing: they use large keys and blocks, can efficiently change keys every block, and have been designed and vetted for resistance to related-key attacks. General-purpose ciphers tend to have different design goals. In particular, AES has key and block sizes that make it nontrivial to use to generate long hash values; AES encryption becomes less efficient when the key changes each block; and related-key attacks make it potentially less secure for use in a hash function than for encryption.

Merkle–Damgård construction

A hash function must be able to process an arbitrary-length message into a fixed-length output. This can be achieved by breaking the input up into a series of equal-sized blocks, and operating on them in sequence using a one-way compression function. The compression function can either be specially designed for hashing or be built from a block cipher. A hash function built with the Merkle–Damgård construction is as resistant to collisions as is its compression function; any collision for the full hash function can be traced back to a collision in the compression function.

The last block processed should also be unambiguously length padded; this is crucial to the security of this construction. This construction is called the Merkle–Damgård construction. Most widely used hash functions, including SHA-1 and MD5, take this form.

The construction has certain inherent flaws, including length-extension and generate-and-paste attacks, and cannot be parallelized. As a result, many entrants in the recent NIST hash function competition were built on different, sometimes novel, constructions.
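The construction and its length-extension weakness can be sketched with a toy hash. Everything about the parameters here is an assumption made for illustration: the compression function is simply SHA-256 truncated to 8 bytes, the block size is 16 bytes, and the padding is a 0x80 byte, zero bytes, and an 8-byte message bit length. It is not a secure design.

```python
import hashlib

BLOCK_SIZE = 16           # bytes per message block (toy value)
STATE_SIZE = 8            # bytes of chaining state and of output (toy value)
IV = bytes(STATE_SIZE)    # fixed initial chaining value


def compress(state: bytes, block: bytes) -> bytes:
    """Stand-in one-way compression function: (state, block) -> new state."""
    return hashlib.sha256(state + block).digest()[:STATE_SIZE]


def pad(message: bytes) -> bytes:
    """Append 0x80, zero bytes, and the 8-byte big-endian bit length."""
    padded = message + b"\x80"
    padded += bytes((-len(padded) - 8) % BLOCK_SIZE)
    return padded + (len(message) * 8).to_bytes(8, "big")


def absorb(state: bytes, data: bytes) -> bytes:
    """Feed block-aligned data through the compression function in sequence."""
    for i in range(0, len(data), BLOCK_SIZE):
        state = compress(state, data[i:i + BLOCK_SIZE])
    return state


def md_hash(message: bytes) -> bytes:
    """Toy Merkle-Damgard hash: pad, then chain the compression function."""
    return absorb(IV, pad(message))


def length_extend(digest: bytes, original_len: int, suffix: bytes) -> bytes:
    """Compute md_hash(pad(m) + suffix) from md_hash(m) and len(m) alone."""
    glue_len = len(pad(bytes(original_len)))   # length of pad(m); depends only on len(m)
    tail = pad(bytes(glue_len) + suffix)[glue_len:]  # suffix plus its final padding
    return absorb(digest, tail)


secret = b"unknown to the attacker"
forged = length_extend(md_hash(secret), len(secret), b"&admin=true")
print(forged == md_hash(pad(secret) + b"&admin=true"))
```

The digest is the final chaining state, so anyone holding it can resume the chain where the original computation stopped. The strictly sequential loop in `absorb` is also why the construction cannot be parallelized.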

Use in building other cryptographic primitives

Hash functions can be used to build other cryptographic primitives. For these other primitives to be cryptographically secure, care must be taken to build them correctly.

Message authentication codes (MACs) (also called keyed hash functions) are often built from hash functions. HMAC is such a MAC.

Just as block ciphers can be used to build hash functions, hash functions can be used to build block ciphers. Luby-Rackoff constructions using hash functions can be provably secure if the underlying hash function is secure. Also, many hash functions (including SHA-1 and SHA-2) are built by using a special-purpose block cipher in a Davies-Meyer or other construction. That cipher can also be used in a conventional mode of operation, without the same security guarantees. See SHACAL, BEAR and LION.

Pseudorandom number generators (PRNGs) can be built using hash functions. This is done by combining a (secret) random seed with a counter and hashing it.
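A minimal sketch of the seed-and-counter approach is shown below, using SHA-256. The 8-byte big-endian counter encoding and the function name `hash_prng` are assumptions of this sketch; standardized designs specify such details precisely and add features such as reseeding.

```python
import hashlib


def hash_prng(seed: bytes, n_bytes: int) -> bytes:
    """Generate n_bytes by hashing seed || counter for counter = 0, 1, 2, ..."""
    output = bytearray()
    counter = 0
    while len(output) < n_bytes:
        block = hashlib.sha256(seed + counter.to_bytes(8, "big")).digest()
        output += block
        counter += 1
    return bytes(output[:n_bytes])


stream = hash_prng(b"secret random seed (illustrative)", 80)
print(stream.hex())
```

The output is fully determined by the seed, so its unpredictability rests on the seed being secret and on the hash function behaving like a random function.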

Some hash functions, such as Skein, Keccak, and RadioGatún output an arbitrarily long stream and can be used as a stream cipher, and stream ciphers can also be built from fixed-length digest hash functions. Often this is done by first building a cryptographically secure pseudorandom number generator and then using its stream of random bytes as keystream. SEAL is a stream cipher that uses SHA-1 to generate internal tables, which are then used in a keystream generator more or less unrelated to the hash algorithm. SEAL is not guaranteed to be as strong (or weak) as SHA-1. Similarly, the key expansion of the HC-128 and HC-256 stream ciphers makes heavy use of the SHA256 hash function.

Concatenation

Concatenating outputs from multiple hash functions provides collision resistance as good as the strongest of the algorithms included in the concatenated result. For example, older versions of Transport Layer Security (TLS) and Secure Sockets Layer (SSL) use concatenated MD5 and SHA-1 sums.[8][9] This ensures that a method to find collisions in one of the hash functions does not defeat data protected by both hash functions.

For Merkle–Damgård construction hash functions, the concatenated function is as collision-resistant as its strongest component, but not more collision-resistant. Antoine Joux observed that 2-collisions lead to n-collisions: If it is feasible for an attacker to find two messages with the same MD5 hash, the attacker can find as many messages as the attacker desires with identical MD5 hashes with no greater difficulty.[10] Among the n messages with the same MD5 hash, there is likely to be a collision in SHA-1. The additional work needed to find the SHA-1 collision (beyond the exponential birthday search) requires only polynomial time.[11][12]

Cryptographic hash algorithms

There is a long list of cryptographic hash functions, although many have been found to be vulnerable and should not be used. Even if a hash function has never been broken, a successful attack against a weakened variant may undermine the experts' confidence and lead to its abandonment. For instance, in August 2004 weaknesses were found in several then-popular hash functions, including SHA-0, RIPEMD, and MD5. These weaknesses called into question the security of stronger algorithms derived from the weak hash functions, in particular SHA-1 (a strengthened version of SHA-0), RIPEMD-128, and RIPEMD-160 (both strengthened versions of RIPEMD). Neither SHA-0 nor RIPEMD is widely used, since they were replaced by their strengthened versions.

As of 2009, the two most commonly used cryptographic hash functions were MD5 and SHA-1. However, a successful attack on MD5 broke Transport Layer Security in 2008.[13]

The United States National Security Agency (NSA) developed SHA-0 and SHA-1.

On 12 August 2004, Joux, Carribault, Lemuet, and Jalby announced a collision for the full SHA-0 algorithm. Joux et al. accomplished this using a generalization of the Chabaud and Joux attack. They found that the collision had complexity 2^51 and took about 80,000 CPU hours on a supercomputer with 256 Itanium 2 processors, equivalent to 13 days of full-time use of the supercomputer.

In February 2005, an attack on SHA-1 was reported that would find collisions in about 2^69 hashing operations, rather than the 2^80 expected for a 160-bit hash function. In August 2005, another attack on SHA-1 was reported that would find collisions in 2^63 operations. Though theoretical weaknesses of SHA-1 exist,[14][15] no collision (or near-collision) has yet been found. Nonetheless, it is often suggested that it may be practical to break within years, and that new applications can avoid these problems by using later members of the SHA family, such as SHA-2, or using techniques such as randomized hashing[16][17] that do not require collision resistance.

However, to ensure the long-term robustness of applications that use hash functions, there was a competition to design a replacement for SHA-2. On October 2, 2012, Keccak was selected as the winner of the NIST hash function competition. A version of this algorithm became a FIPS standard on August 5, 2015 under the name SHA-3.[18]

Another finalist from the NIST hash function competition, BLAKE, was optimized to produce BLAKE2 which is notable for being faster than SHA-3, SHA-2, SHA-1, or MD5, and is used in numerous applications and libraries.


References

1. Schneier, Bruce. "Cryptanalysis of MD5 and SHA: Time for a New Standard". Computerworld. Retrieved 2016-04-20. Quote: "Much more than encryption algorithms, one-way hash functions are the workhorses of modern cryptography."

2. Katz, Jonathan and Lindell, Yehuda (2008). Introduction to Modern Cryptography. Chapman & Hall/CRC.

3. Rogaway & Shrimpton 2004, in Sec. 5. Implications.

4. "Flickr's API Signature Forgery Vulnerability". Thai Duong and Juliano Rizzo.

5. Lyubashevsky, Vadim and Micciancio, Daniele and Peikert, Chris and Rosen, Alon. "SWIFFT: A Modest Proposal for FFT Hashing". Springer. Retrieved 29 August 2016.

6. Perrin, Chad (December 5, 2007). "Use MD5 hashes to verify software downloads". TechRepublic. Retrieved March 2, 2013.

7. "Password Hashing Competition". Retrieved March 3, 2013.

8. Florian Mendel; Christian Rechberger; Martin Schläffer. "MD5 is Weaker than Weak: Attacks on Concatenated Combiners". "Advances in Cryptology - ASIACRYPT 2009". p. 145. quote: 'Concatenating ... is often used by implementors to "hedge bets" on hash functions. A combiner of the form MD5||SHA-1 as used in SSL3.0/TLS1.0 ... is an example of such a strategy.'

9. Danny Harnik; Joe Kilian; Moni Naor; Omer Reingold; Alon Rosen. "On Robust Combiners for Oblivious Transfer and Other Primitives". "Advances in Cryptology - EUROCRYPT 2005". quote: "the concatenation of hash functions as suggested in the TLS... is guaranteed to be as secure as the candidate that remains secure." p. 99.

10. Antoine Joux. Multicollisions in Iterated Hash Functions. Application to Cascaded Constructions. LNCS 3152/2004, pages 306–316.

11. Finney, Hal (August 20, 2004). "More Problems with Hash Functions". The Cryptography Mailing List. Retrieved May 25, 2016.

12. Hoch, Jonathan J.; Shamir, Adi (2008). "On the Strength of the Concatenated Hash Combiner when All the Hash Functions Are Weak" (PDF). Retrieved May 25, 2016.

13. Alexander Sotirov, Marc Stevens, Jacob Appelbaum, Arjen Lenstra, David Molnar, Dag Arne Osvik, Benne de Weger, MD5 considered harmful today: Creating a rogue CA certificate, accessed March 29, 2009.

14. Xiaoyun Wang, Yiqun Lisa Yin, and Hongbo Yu, Finding Collisions in the Full SHA-1

15. Bruce Schneier, Cryptanalysis of SHA-1 (summarizes Wang et al. results and their implications)

16. Shai Halevi, Hugo Krawczyk, Update on Randomized Hashing

17. Shai Halevi and Hugo Krawczyk, Randomized Hashing and Digital Signatures

18. NIST.gov – Computer Security Division – Computer Security Resource Center

External links

- Paar, Christof; Pelzl, Jan (2009). "11: Hash Functions". Understanding Cryptography, A Textbook for Students and Practitioners. Springer. (companion web site contains online cryptography course that covers hash functions)

- "The ECRYPT Hash Function Website".

- Buldas, A. (2011). "Series of mini-lectures about cryptographic hash functions".

- Rogaway, P.; Shrimpton, T. (2004). "Cryptographic Hash-Function Basics: Definitions, Implications, and Separations for Preimage Resistance, Second-Preimage Resistance, and Collision Resistance". CiteSeerX 10.1.1.3.6200
