Year 2000 problem

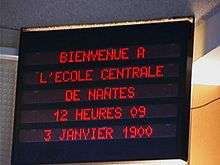

The Year 2000 problem is also known as the Y2K problem, the Millennium bug, the Y2K bug, or Y2K. Problems arose because programmers represented the four-digit year with only the final two digits. This made the year 2000 indistinguishable from 1900. The assumption that a twentieth-century date was always understood caused various errors, such as the incorrect display of dates, and the inaccurate ordering of automated dated records or real-time events.

In 1997, the British Standards Institution (BSI) developed a standard, DISC PD2000-1,[1] which defines "Year 2000 Conformity requirements" as four rules:

- No valid date will cause any interruption in operations.

- Calculation of durations between, or the sequence of, pairs of dates will be correct whether any dates are in different centuries.

- In all interfaces and in all storage, the century must be unambiguous, either specified, or calculable by algorithm.

- Year 2000 must be recognised as a leap year.

The standard identifies two problems that may exist in many computer programs.

First, the practice of representing the year with two digits became problematic, with logical errors arising upon "rollover" from x99 to x00. This caused some date-related processing to operate incorrectly for dates and times on and after 1 January 2000, and on other critical dates which were billed "event horizons". Without corrective action, long-working systems would break down when the "... 97, 98, 99, 00 ..." ascending numbering assumption suddenly became invalid.

Secondly, some programmers had misunderstood the Gregorian calendar rule that determines whether years exactly divisible by 100 are leap years, and assumed the year 2000 would not be a leap year. Years divisible by 100 are not leap years, except for years that are divisible by 400. Thus the year 2000 was a leap year.

Companies and organisations worldwide checked, fixed, and upgraded their computer systems.[2]

The number of computer failures that occurred when the clocks rolled over into 2000 in spite of remedial work is not known; one reason is the reluctance of organisations to report problems.[3]

Background

Y2K is a numeronym and was the common abbreviation for the year 2000 software problem. The abbreviation combines the letter Y for "year", and k for the SI unit prefix kilo meaning 1000; hence, 2K signifies 2000. It was also named the Millennium Bug because it was associated with the popular (rather than literal) roll-over of the millennium, even though the problem could have occurred at the end of any ordinary century.

The Year 2000 problem was the subject of the early book, Computers in Crisis by Jerome and Marilyn Murray (Petrocelli, 1984; reissued by McGraw-Hill under the title The Year 2000 Computing Crisis in 1996). The first recorded mention of the Year 2000 Problem on a Usenet newsgroup occurred on Friday, 18 January 1985, by Usenet poster Spencer Bolles.[4]

The acronym Y2K has been attributed to David Eddy, a Massachusetts programmer,[5] in an e-mail sent on 12 June 1995. He later said, "People were calling it CDC (Century Date Change), FADL (Faulty Date Logic) and other names."

The problem started because on both mainframe computers and later personal computers, storage was expensive, costing from as little as $10 per kilobyte to, in many cases, US$100 per kilobyte or more.[6] It was therefore very important for programmers to reduce storage usage. Since programs could simply prefix "19" to the year of a date, most programs internally used, or stored on disc or tape, data files where the date format was six digits, in the form MMDDYY: MM as two digits for the month, DD as two digits for the day, and YY as two digits for the year. As space on disc and tape was also expensive, this also saved money by reducing the size of stored data files and databases.

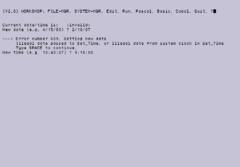

Many computer programs stored years with only two decimal digits; for example, 1980 was stored as 80. Some such programs could not distinguish between the year 2000 and the year 1900. Other programs tried to represent the year 2000 as 19100. This could cause a complete failure and cause date comparisons to produce incorrect results. Some embedded systems, making use of similar date logic, were expected to fail and cause utilities and other crucial infrastructure to fail.
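The failure mode described above can be illustrated with a minimal sketch. The function names are hypothetical and the code is not taken from any real legacy system; it only assumes dates stored as two-digit years or as MMDDYY strings.

```python
def years_between(start_yy: int, end_yy: int) -> int:
    """Naive duration in years, computed from two-digit years only."""
    return end_yy - start_yy


def sort_key_mmddyy(stamp: str) -> tuple:
    """Order MMDDYY strings by (YY, MM, DD), as a legacy sort might."""
    return (stamp[4:6], stamp[0:2], stamp[2:4])


# 1980 to 1999 works as expected.
print(years_between(80, 99))  # 19
# 1980 to 2000 (stored as 00) yields a negative duration.
print(years_between(80, 0))   # -80

# 1 January 2000 sorts before 31 December 1999.
records = ["123199", "010100"]
print(sorted(records, key=sort_key_mmddyy))  # ['010100', '123199']
```

Both results are internally consistent arithmetic; the error lies entirely in the missing century.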

Some warnings of what would happen if nothing was done were particularly dire:

The Y2K problem is the electronic equivalent of the El Niño and there will be nasty surprises around the globe. — John Hamre, United States Deputy Secretary of Defense[7]

Special committees were set up by governments to monitor remedial work and contingency planning, particularly by crucial infrastructures such as telecommunications, utilities and the like, to ensure that the most critical services had fixed their own problems and were prepared for problems with others. While some commentators and experts argued that the coverage of the problem largely amounted to scaremongering,[8] it was only the safe passing of the main "event horizon" itself, 1 January 2000, that fully quelled public fears. Some experts who argued that scaremongering was occurring, such as Ross Anderson, Professor of Security Engineering at the University of Cambridge Computer Laboratory, have since claimed that despite sending out hundreds of press releases about research results suggesting that the problem was not likely to be as big a problem as some had suggested, they were largely ignored by the media.[8]

Programming problem

The practice of using two-digit dates for convenience predates computers, but was never a problem until stored dates were used in calculations.

The need for bit conservation

"I'm one of the culprits who created this problem. I used to write those programs back in the 1960s and 1970s, and was proud of the fact that I was able to squeeze a few elements of space out of my program by not having to put a 19 before the year. Back then, it was very important. We used to spend a lot of time running through various mathematical exercises before we started to write our programs so that they could be very clearly delimited with respect to space and the use of capacity. It never entered our minds that those programs would have lasted for more than a few years. As a consequence, they are very poorly documented. If I were to go back and look at some of the programs I wrote 30 years ago, I would have one terribly difficult time working my way through step-by-step."

—Alan Greenspan, 1998[9]

In the first half of the 20th century, well before the computer era, business data processing was done using unit record equipment and punched cards, most commonly the 80-column variety employed by IBM, which dominated the industry. Many tricks were used to squeeze needed data into fixed-field 80-character records. Saving two digits for every date field was significant in this effort.

In the 1960s, computer memory and mass storage were scarce and expensive. Early core memory cost one dollar per bit. Popular commercial computers, such as the IBM 1401, shipped with as little as 2 kilobytes of memory. Programs often mimicked card processing techniques. Commercial programming languages of the time, such as COBOL and RPG, processed numbers in their character representations. Over time the punched cards were converted to magnetic tape and then disc files, but the structure of the data usually changed very little. Data was still input using punched cards until the mid-1970s. Machine architectures, programming languages and application designs were evolving rapidly. Neither managers nor programmers of that time expected their programs to remain in use for many decades. The realisation that databases were a new type of program with different characteristics had not yet come.

There were exceptions, of course. The first person known to publicly address this issue was Bob Bemer, who had noticed it in 1958 as a result of work on genealogical software. He spent the next twenty years trying to make programmers, IBM, the government of the United States and the ISO aware of the problem, with little result. This included the recommendation that the COBOL PICTURE clause should be used to specify four-digit years for dates.[10] Despite magazine articles on the subject from 1970 onward, the majority of programmers and managers only started recognising Y2K as a looming problem in the mid-1990s, but even then, inertia and complacency caused it to be mostly unresolved until the last few years of the decade. In 1989, Erik Naggum was instrumental in ensuring that internet mail used four-digit representations of years by including a strong recommendation to this effect in the internet host requirements document RFC 1123.[11]

Saving space on stored dates persisted into the Unix era, with most systems storing dates in a single 32-bit word, typically as the number of seconds elapsed since some fixed date.

Resulting bugs from date programming

Storage of a combined date and time within a fixed binary field is often considered a solution, but the possibility for software to misinterpret dates remains because such date and time representations must be relative to some known origin. Rollover of such systems is still a problem but can happen at varying dates and can fail in various ways. For example:

- The Microsoft Excel spreadsheet program had a very elementary Y2K problem: Excel (in both Windows and Mac versions, when they are set to start at 1900) incorrectly set the year 1900 as a leap year for compatibility with Lotus 1-2-3.[12] In addition, the years 2100, 2200, and so on, were regarded as leap years. This bug was fixed in later versions, but since the epoch of the Excel timestamp was set to the meaningless date of 0 January 1900 in previous versions, the year 1900 is still regarded as a leap year to maintain backward compatibility.

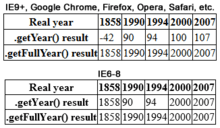

- In the C programming language, the standard library function to extract the year from a timestamp returns the year minus 1900. Many programs written in languages that adopted this convention from C, such as Perl and Java, two programming languages widely used in web development, incorrectly treated this value as the last two digits of the year. On the web this was usually a harmless presentation bug, but it did cause many dynamically generated web pages to display 1 January 2000 as "1/1/19100", "1/1/100", or other variants, depending on the display format.

- JavaScript was changed due to concerns over the Y2K bug. The return value for years differed between versions, sometimes being a four-digit representation and sometimes a two-digit representation, which forced programmers to rewrite already working code to make sure web pages worked for all versions.[13][14]

- Older applications written for the commonly used UNIX Source Code Control System failed to handle years that began with the digit "2".

- In the Windows 3.x file manager, dates displayed as 1/1/19:0 for 1/1/2000 (because the colon is the character after "9" in the ASCII character set). An update was available.

- Some software, such as Math Blaster Episode I: In Search of Spot,[15] which treats years as two-digit values instead of four, will give the year as "1900", "1901", and so on, depending on the last two digits of the present year.
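Two of the display bugs listed above can be reproduced in a short sketch. It is written in Python rather than C, and the parameter `years_since_1900` merely imitates the "year minus 1900" convention described for the C library. The function names are hypothetical, and the digit-building routine is only one plausible way a "19:0" display could arise, not the actual File Manager code.

```python
def format_date_buggy(day: int, month: int, years_since_1900: int) -> str:
    """Treat the year offset as if it were the last two digits of the year."""
    return f"{month}/{day}/19{years_since_1900}"


def format_date_fixed(day: int, month: int, years_since_1900: int) -> str:
    """Add 1900 to the offset, which is what the convention requires."""
    return f"{month}/{day}/{1900 + years_since_1900}"


def two_year_chars(years_since_1900: int) -> str:
    """Build two 'digits' by adding the tens and ones to the code for '0'."""
    tens, ones = divmod(years_since_1900, 10)
    return chr(ord("0") + tens) + chr(ord("0") + ones)


# 1 January 2000 corresponds to an offset of 100.
print(format_date_buggy(1, 1, 100))      # 1/1/19100
print(format_date_fixed(1, 1, 100))      # 1/1/2000
# A tens value of 10 lands on ':', the character after '9' in ASCII.
print("1/1/19" + two_year_chars(100))    # 1/1/19:0
```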

Date bugs similar to Y2K

4 January 1975

This date overflowed the 12-bit field that had been used in the DECsystem-10 operating systems. There were numerous problems and crashes related to this bug while an alternative format was developed.[16]

9 September 1999

Even before 1 January 2000 arrived, there were also some worries about 9 September 1999 (albeit fewer than those generated by Y2K). Because this date could also be written in the numeric format 9/9/99, it could have conflicted with the date value 9999, frequently used to specify an unknown date. It was thus possible that database programs might act on the records containing unknown dates on that day. Data entry operators commonly entered 9999 into required fields for an unknown future date (e.g., a termination date for cable television or telephone service) in order to process computer forms using CICS software.[17] Somewhat similar to this is the end-of-file code 9999, used in older programming languages. While fears arose that some programs might unexpectedly terminate on that date, the bug was more likely to confuse computer operators than machines.

Leap years

Mostly, a year is a leap year if it is evenly divisible by four. A year divisible by 100, however, is not a leap year in the Gregorian calendar unless it is also divisible by 400. For example, 1600 was a leap year, but 1700, 1800 and 1900 were not. Some programs may have relied on the oversimplified rule that a year divisible by four is a leap year. This method works fine for the year 2000 (because it is a leap year), and will not become a problem until 2100, when older legacy programs will likely have long since been replaced. Other programs contained incorrect leap year logic, assuming for instance that no year divisible by 100 could be a leap year. An assessment of this leap year problem including a number of real life code fragments appeared in 1998.[18] For information on why century years are treated differently, see Gregorian calendar.
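The three rules discussed in this section can be compared side by side. The following is a minimal sketch with hypothetical function names; only the first function implements the full Gregorian rule.

```python
def is_leap_gregorian(year: int) -> bool:
    """Full rule: divisible by 4, except centuries not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def is_leap_divisible_by_four(year: int) -> bool:
    """Oversimplified rule: any year divisible by 4 is a leap year."""
    return year % 4 == 0


def is_leap_no_century_years(year: int) -> bool:
    """Incorrect rule: no year divisible by 100 is ever a leap year."""
    return year % 4 == 0 and year % 100 != 0


for year in (1900, 2000, 2100):
    print(
        year,
        is_leap_gregorian(year),
        is_leap_divisible_by_four(year),
        is_leap_no_century_years(year),
    )
```

For 2000 the oversimplified rule happens to agree with the full rule, while the "no century years" rule gets it wrong. For 1900 and 2100 the situation is reversed.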

Year 2010 problem

Some systems had problems once the year rolled over to 2010. This was dubbed by some in the media as the "Y2K+10" or "Y2.01K" problem.[19]

The main source of problems was confusion between hexadecimal number encoding and binary-coded decimal encodings of numbers. Both hexadecimal and BCD encode the numbers 0–9 as 0x0–0x9. But BCD encodes the number 10 as 0x10, whereas hexadecimal encodes the number 10 as 0x0A; 0x10 interpreted as a hexadecimal encoding represents the number 16.

For example, because the SMS protocol uses BCD for dates, some mobile phone software incorrectly reported dates of SMSes as 2016 instead of 2010. Windows Mobile is the first software reported to have been affected by this glitch; in some cases WM6 changes the date of any incoming SMS message sent after 1 January 2010 from the year "2010" to "2016".[20][21]
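The confusion between the two encodings can be sketched as follows. The function names are hypothetical, and the example uses plain packed BCD in a single byte; it does not model the exact on-the-wire layout of SMS timestamps.

```python
def decode_bcd(byte: int) -> int:
    """Read one byte as two decimal digits (packed binary-coded decimal)."""
    high, low = divmod(byte, 16)
    if high > 9 or low > 9:
        raise ValueError("not a valid packed BCD byte")
    return high * 10 + low


def decode_as_binary(byte: int) -> int:
    """Read the same byte as an ordinary binary number."""
    return byte


year_byte = 0x10  # BCD encoding of the two-digit year 10

print(2000 + decode_bcd(year_byte))        # 2010, the intended year
print(2000 + decode_as_binary(year_byte))  # 2016, the misread year
```

The two readings agree for 0x00 through 0x09, which is consistent with such a bug staying hidden until 2010.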

Other systems affected include EFTPOS terminals,[22] and the PlayStation 3 (except the Slim model).[23]

The most important occurrences of such a glitch were in Germany, where upwards of 20 million bank cards became unusable, and with Citibank Belgium, whose digipass customer identification chips failed.[24]

Year 2038 problem

The original Unix time datatype (time_t) stores a date and time as a signed long integer (on 32-bit systems a 32-bit integer) representing the number of seconds since 1 January 1970. During and after 2038, this number will exceed 2^31 − 1, the largest number representable by a signed long integer on 32-bit systems, causing the Year 2038 problem (also known as the Unix Millennium bug or Y2K38). As a long integer in 64-bit systems uses 64 bits, the problem does not realistically exist on 64-bit systems that use the LP64 model.
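The rollover moment can be computed directly. This is a minimal sketch that simulates a signed 32-bit counter in Python; `to_int32` is a hypothetical helper, and the wrap-around it models is the behaviour typically seen on two's-complement hardware, not something the C language guarantees.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1


def to_int32(value: int) -> int:
    """Wrap an integer into the signed 32-bit two's-complement range."""
    return (value + 2**31) % 2**32 - 2**31


# Last second that a signed 32-bit counter can represent.
LAST_REPRESENTABLE = EPOCH + timedelta(seconds=INT32_MAX)
print(LAST_REPRESENTABLE.isoformat())  # 2038-01-19T03:14:07+00:00

# One second later the simulated counter wraps to a large negative value.
wrapped = to_int32(INT32_MAX + 1)
AFTER_WRAP = EPOCH + timedelta(seconds=wrapped)
print(wrapped)                  # -2147483648
print(AFTER_WRAP.isoformat())   # 1901-12-13T20:45:52+00:00
```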

Programming solutions

Several very different approaches were used to solve the Year 2000 problem in legacy systems. Three of them follow:

- Date expansion

- Two-digit years were expanded to include the century (becoming four-digit years) in programs, files, and databases. This was considered the "purest" solution, resulting in unambiguous dates that are permanent and easy to maintain. However, this method was costly, requiring massive testing and conversion efforts, and usually affecting entire systems.

- Date re-partitioning

- In legacy databases whose size could not be economically changed, six-digit year/month/day codes were converted to three-digit years (with 1999 represented as 099 and 2001 represented as 101, etc.) and three-digit days (ordinal date in year). Only input and output instructions for the date fields had to be modified; most other date operations and whole-record operations required no change. This delays the eventual roll-over problem to the end of the year 2899.

- Windowing

- Two-digit years were retained, and programs determined the century value only when needed for particular functions, such as date comparisons and calculations. (The century "window" refers to the 100-year period to which a date belongs). This technique, which required installing small patches of code into programs, was simpler to test and implement than date expansion, thus much less costly. While not a permanent solution, windowing fixes were usually designed to work for several decades. This was thought acceptable, as older legacy systems tend to eventually get replaced by newer technology.[25]
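Windowing and date re-partitioning, as described above, can be sketched in a few lines. The function names and the pivot value of 50 are assumptions made for illustration; real remediation projects chose their own windows and field layouts.

```python
from datetime import date, timedelta


def window_year(yy: int, pivot: int = 50) -> int:
    """Fixed window: values below the pivot map to 20yy, the rest to 19yy."""
    if not 0 <= yy <= 99:
        raise ValueError("expected a two-digit year")
    return 2000 + yy if yy < pivot else 1900 + yy


def pack_repartitioned(d: date) -> str:
    """Six digits: three-digit year offset from 1900, then ordinal day."""
    offset = d.year - 1900
    if not 0 <= offset <= 999:
        raise ValueError("year outside the 1900-2899 range")
    return f"{offset:03d}{d.timetuple().tm_yday:03d}"


def unpack_repartitioned(code: str) -> date:
    """Inverse of pack_repartitioned."""
    year = 1900 + int(code[:3])
    return date(year, 1, 1) + timedelta(days=int(code[3:]) - 1)


print(window_year(99), window_year(0))       # 1999 2000
print(pack_repartitioned(date(1999, 12, 31)))  # 099365
print(pack_repartitioned(date(2001, 1, 1)))    # 101001
print(unpack_repartitioned("999365"))          # 2899-12-31
```

The re-partitioned code keeps the original six-digit width, which is why record layouts did not need to change. The windowed year is only correct for dates inside the chosen 100-year span.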

Documented errors

Before 2000

- On 28 December 1999, 10,000 card swipe machines issued by HSBC and manufactured by Racal stopped processing credit and debit card transactions.[8] The stores relied on paper transactions until the machines started working again on 1 January.[26]

On 1 January 2000

When 1 January 2000 arrived, there were problems generally regarded as minor. Consequences did not always appear precisely at midnight: some programs were not active at that moment, and their problems would only show up when they were invoked. Not all problems recorded were directly caused by Y2K programming; minor technological glitches occur on a regular basis. Some caused erroneous results, some caused machines to stop working, some caused date errors, and two caused malfunctions.

Reported problems include:

- In Sheffield, United Kingdom, incorrect Down syndrome test results were sent to 154 pregnant women and two abortions were carried out as a direct result of a Y2K bug. Four babies with Down syndrome were also born to mothers who had been told they were in the low-risk group.[27]

- In Ishikawa, Japan, radiation-monitoring equipment failed at midnight; however, officials stated there was no risk to the public.[28]

- In Onagawa, Japan, an alarm sounded at a nuclear power plant at two minutes after midnight.[28]

- In Japan, at two minutes past midnight, Osaka Media Port, a telecommunications carrier, found errors in the date management part of the company's network. The problem was fixed by 02:43 and no services were disrupted.[29]

- In Japan, NTT Mobile Communications Network (NTT DoCoMo), Japan's largest cellular operator, reported on 1 January 2000, that some models of mobile telephones were deleting new messages received, rather than the older messages, as the memory filled up.[29]

- In Australia, bus ticket validation machines in two states failed to operate.[30]

- In the United States, 150 slot machines at race tracks in Delaware stopped working.[30]

- In the United States, the US Naval Observatory, which runs the master clock that keeps the country's official time, gave the date on its website as 1 Jan 19100.[31]

- In France, the national weather forecasting service, Meteo France, said a Y2K bug made the date on a webpage showing a map with Saturday's weather forecast appear as "01/01/19100".[30] This also occurred on other websites, including att.net, at the time a general-purpose portal site primarily for AT&T Worldnet customers in the United States.

On 1 March 2000

Problems were reported but these were mostly minor.[32]

- In Japan, around five percent of post office cash dispensers failed to work.

- In Japan, data from weather bureau computers was corrupted.

- In the United States, the Coast Guard's message processing system was affected.

- At Offutt Air Force Base south of Omaha, Nebraska, records of aircraft maintenance parts could not be accessed.

- At Reagan National Airport, Washington, D.C., check-in lines lengthened after baggage handling programs were affected.

- In Bulgaria, police documents were issued with expiry dates of 29 February 2005 and 29 February 2010 (which are not leap years) and the system defaulted to 1900.[33]

On 31 December 2000 or 1 January 2001

Some software did not correctly recognise 2000 as a leap year, and so worked on the basis of the year having 365 days. On the last day of 2000 (day 366) these systems exhibited various errors. These were generally minor, apart from reports of some Norwegian trains that were delayed until their clocks were put back by a month.[34]

Government responses

Bulgaria

Although only two digits are allocated for the birth year in the Bulgarian national identification number, the year 1900 problem and subsequently the Y2K problem were addressed by the use of unused values above 12 in the month range. For all persons born before 1900, the month is stored as the calendar month plus 20, and for all persons born after 1999, the month is stored as the calendar month plus 40.[35]
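The month-offset scheme can be decoded with a small function. This is a minimal sketch with a hypothetical function name; it assumes that "before 1900" means the 1800s and does not validate any other part of the identification number.

```python
from typing import Tuple


def decode_birth_year_month(yy: int, stored_month: int) -> Tuple[int, int]:
    """Return (four-digit year, calendar month) from the stored fields."""
    if 1 <= stored_month <= 12:
        return 1900 + yy, stored_month
    if 21 <= stored_month <= 32:
        # Month plus 20 marks a birth before 1900 (assumed: the 1800s).
        return 1800 + yy, stored_month - 20
    if 41 <= stored_month <= 52:
        # Month plus 40 marks a birth after 1999.
        return 2000 + yy, stored_month - 40
    raise ValueError("stored month is outside the defined ranges")


print(decode_birth_year_month(75, 3))   # (1975, 3)
print(decode_birth_year_month(95, 23))  # (1895, 3)
print(decode_birth_year_month(5, 43))   # (2005, 3)
```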

Netherlands

The Dutch Government promoted Y2K Information Sharing and Analysis Centers (ISACs) to share readiness between industries, without threat of antitrust violations or liability based on information shared.

Norway and Finland

Norway and Finland changed their national identification numbers to indicate the century in which a person was born. In both countries, the birth year was historically indicated by two digits only. This numbering system had already given rise to a similar problem, the "Year 1900 problem", which arose due to problems distinguishing between people born in the 20th and 19th centuries. Y2K fears drew attention to an older issue, while prompting a solution to a new problem. In Finland, the problem was solved by replacing the hyphen ("-") in the number with the letter "A" for people born in the 21st century. In Norway, the range of the individual numbers following the birth date was altered from 0–499 to 500–999.
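Both century markers can be expressed as simple lookups. The sketch below follows only the simplified description in this section; the function names are hypothetical, and the real numbering schemes contain further cases that are not modelled here.

```python
def finnish_birth_year(yy: int, separator: str) -> int:
    """Hyphen marks the 20th century, the letter A the 21st."""
    centuries = {"-": 1900, "A": 2000}
    if separator not in centuries:
        raise ValueError("separator not covered by this sketch")
    return centuries[separator] + yy


def norwegian_birth_year(yy: int, individual_number: int) -> int:
    """Individual numbers 0-499 mark the 1900s, 500-999 the 2000s."""
    if 0 <= individual_number <= 499:
        return 1900 + yy
    if 500 <= individual_number <= 999:
        return 2000 + yy
    raise ValueError("individual number outside 0-999")


print(finnish_birth_year(5, "-"), finnish_birth_year(5, "A"))      # 1905 2005
print(norwegian_birth_year(5, 123), norwegian_birth_year(5, 623))  # 1905 2005
```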

Uganda

The Ugandan government responded to the Y2K threat by setting up a Y2K Task Force.[36] In August 1999 an independent international assessment by the World Bank International Y2k Cooperation Centre found that Uganda's website was in the top category as "highly informative". This put Uganda in the "top 20" out of 107 national governments, on a par with the United States, United Kingdom, Canada, Australia and Japan, and ahead of Germany, Italy, Austria and Switzerland, which were rated as only "somewhat informative". The report said that "Countries which disclose more Y2k information will be more likely to maintain public confidence in their own countries and in the international markets."[37]

United States

In 1998, the United States government responded to the Y2K threat by passing the Year 2000 Information and Readiness Disclosure Act, by working with private sector counterparts in order to ensure readiness, and by creating internal continuity of operations plans in the event of problems. The effort was coordinated out of the White House by the President's Council on Year 2000 Conversion, headed by John Koskinen.[38] The White House effort was conducted in co-ordination with the then-independent Federal Emergency Management Agency (FEMA), and an interim Critical Infrastructure Protection Group, then in the Department of Justice, now in Homeland Security.

The US Government followed a three-part approach to the problem: (1) Outreach and Advocacy (2) Monitoring and Assessment and (3) Contingency Planning and Regulation.[39]

A feature of US Government outreach was Y2K websites including Y2K.GOV. Many US Government agencies have since taken down their Y2K websites. Some of these documents may be available through the National Archives and Records Administration[40] or the Wayback Machine.

Each federal agency had its own Y2K task force which worked with its private sector counterparts. The FCC had the FCC Year 2000 Task Force.[39][41]

Most industries had contingency plans that relied upon the internet for backup communications. However, as no federal agency had clear authority with regard to the internet at this time (it had passed from the US Department of Defense to the US National Science Foundation and then to the US Department of Commerce), no agency was assessing the readiness of the internet itself. Therefore, on 30 July 1999, the White House held the White House Internet Y2K Roundtable.[42]

United Kingdom

The British government made regular assessments of the progress made by different sectors of business towards becoming Y2K-compliant and there was wide reporting of sectors which were laggards. Companies and institutions were classified according to a traffic light scheme ranging from green "no problems" to red "grave doubts whether the work can be finished in time". Many organisations finished far ahead of the deadline.

International co-operation

The International Y2K Cooperation Center (IY2KCC) was established at the behest of national Y2K coordinators from over 120 countries when they met at the First Global Meeting of National Y2K Coordinators at the United Nations in December 1998. IY2KCC established an office in Washington, D.C. in March 1999. Funding was provided by the World Bank, and Bruce W. McConnell was appointed as director.

IY2KCC's mission was to "promote increased strategic cooperation and action among governments, peoples, and the private sector to minimize adverse Y2K effects on the global society and economy." Activities of IY2KCC were conducted in six areas:

- National Readiness: Promoting Y2K programs worldwide

- Regional Cooperation: Promoting and supporting co-ordination within defined geographic areas

- Sector Cooperation: Promoting and supporting co-ordination within and across defined economic sectors

- Continuity and Response Cooperation: Promoting and supporting co-ordination to ensure essential services and provisions for emergency response

- Information Cooperation: Promoting and supporting international information sharing and publicity

- Facilitation and Assistance: Organizing global meetings of Y2K coordinators and identifying resources

IY2KCC closed down in March 2000.[43]

Private sector response

- The United States enacted the Year 2000 Information and Readiness Disclosure Act, which limited the liability of businesses that had properly disclosed their Y2K readiness.

- Insurance companies sold insurance policies covering failure of businesses due to Y2K problems.

- Attorneys organised and mobilised for Y2K class action lawsuits (which were not pursued).

- Survivalist-related businesses (gun dealers, surplus and sporting goods) anticipated increased business in the final months of 1999 in an event known as the Y2K scare.[44]

- The Long Now Foundation, which (in their words) "seeks to promote 'slower/better' thinking and to foster creativity in the framework of the next 10,000 years", has a policy of anticipating the Year 10,000 problem by writing all years with five digits. For example, they list "01996" as their year of founding.

- While there was no one comprehensive internet Y2K effort, multiple internet trade associations and organisations banded together to form the Internet Year 2000 Campaign.[45] This effort partnered with the White House's Internet Y2K Roundtable.

The Y2K issue was a major topic of discussion in the late 1990s and as such showed up in most popular media. A number of "Y2K disaster" books were published such as Deadline Y2K by Mark Joseph. Movies such as Y2K: Year to Kill capitalised on the currency of Y2K, as did numerous TV shows, comic strips, and computer games.

Fringe group responses

A variety of fringe groups and individuals such as those within some fundamentalist religious organizations, survivalists, cults, anti-social movements, self-sufficiency enthusiasts, communes and others attracted to conspiracy theories, embraced Y2K as a tool to engender fear and provide a form of evidence for their respective theories. End-of-the-world scenarios and apocalyptic themes were common in their communication.

Interest in the survivalist movement peaked in 1999 in its second wave for that decade, triggered by Y2K fears. In the time before extensive efforts were made to rewrite computer programming codes to mitigate the possible impacts, some writers such as Gary North, Ed Yourdon, James Howard Kunstler,[46] and Ed Yardeni anticipated widespread power outages, food and gasoline shortages, and other emergencies. North and others raised the alarm because they thought Y2K code fixes were not being made quickly enough. While a range of authors responded to this wave of concern, two of the most survival-focused texts to emerge were Boston on Y2K (1998) by Kenneth W. Royce, and Mike Oehler's The Hippy Survival Guide to Y2K.

Y2K was also exploited by some prominent and other lesser known fundamentalist and Pentecostal Christian leaders throughout the Western world, particularly in North America and Australia.[47] Their promotion of the perceived risks of Y2K was combined with end times thinking and apocalyptic prophecies in an attempt to influence followers.[47] The New York Times reported in late 1999, "The Rev. Jerry Falwell suggested that Y2K would be the confirmation of Christian prophecy - God's instrument to shake this nation, to humble this nation. The Y2K crisis might incite a worldwide revival that would lead to the rapture of the church. Along with many survivalists, Mr. Falwell advised stocking up on food and guns".[48] Adherents in these movements were encouraged to engage in food hoarding and take lessons in self-sufficiency, and the more extreme elements planned for a total collapse of modern society. The Chicago Tribune reported that some large fundamentalist churches, motivated by Y2K, were the sites for flea market-like sales of paraphernalia, ranging from gold coins to wood-burning stoves, designed to help people survive a social order crisis.[49]

Betsy Hart, writing for the Deseret News, reported that a lot of the more extreme evangelicals used Y2K to promote a political agenda in which downfall of the government was a desired outcome in order to usher in Christ's reign. She also noted that, "the cold truth is that preaching chaos is profitable and calm doesn't sell many tapes or books".[50] These types of fears and conspiracies were described dramatically by New Zealand-based Christian prophetic author and preacher Barry Smith in his publication, "I Spy with my Little Eye", where he dedicated a whole chapter to Y2K.[51] Some expected, at times through so-called prophecies, that Y2K would be the beginning of a worldwide Christian revival.[52]

It became clear in the aftermath that leaders of these fringe groups had cleverly used fears of apocalyptic outcomes to manipulate followers into dramatic scenes of mass repentance or renewed commitment to their groups, additional giving of funds and more overt commitment to their respective organizations or churches. The Baltimore Sun noted this in their article, "Apocalypse Now - Y2K spurs fears", where they reported the increased call for repentance in the populace in order to avoid God's wrath.[53] Christian leader Col Stringer wrote in his commentary, "Fear-creating writers sold over 45 million books citing every conceivable catastrophe from civil war, planes dropping from the sky to the end of the civilised world as we know it. Reputable preachers were advocating food storage and a "head for the caves" mentality. No banks failed, no planes crashed, no wars or civil war started. And yet not one of these prophets of doom has ever apologised for their scare-mongering tactics."[52] Some prominent North American Christian ministries and leaders generated huge personal and corporate profits through sales of Y2K preparation kits, generators, survival guides, published prophecies and a wide range of other associated merchandise. Christian journalist Rob Boston has documented this[47] in his article "False Prophets, Real Profits Religious Right Leaders' Wild Predictions of Y2K Disaster Didn't Come True, But They Made Money Anyway".

Cost

The total cost of the work done in preparation for Y2K is estimated at over US$300 billion ($413 billion today, once inflation is taken into account).[54][55] IDC calculated that the US spent an estimated $134 billion ($184 billion) preparing for Y2K, and another $13 billion ($18 billion) fixing problems in 2000 and 2001. Worldwide, $308 billion ($424 billion) was estimated to have been spent on Y2K remediation.[56] There are two ways to view the events of 2000 from the perspective of its aftermath:

Supporting view

This view holds that the vast majority of problems had been fixed correctly, and the money was well spent. The situation was essentially one of preemptive alarm. Those who hold this view claim that the lack of problems at the date change reflects the completeness of the project, and that many computer applications would not have continued to function into the 21st century without correction or remediation.

- Expected problems that were not seen by small businesses and small organisations were in fact prevented by Y2K fixes embedded in routine updates to operating system and utility software that were applied several years before 31 December 1999.

- The extent to which larger industry and government fixes averted issues that would have had more significant impacts had they not been fixed was typically not disclosed or widely reported.[57]

- It has also been suggested that on 11 September 2001, the New York infrastructure (including subways, phone service, and financial transactions) was able to continue operating because of the redundant networks established in case of Y2K bug impact[58] and the contingency plans devised by companies.[59] The terrorist attacks and the prolonged blackout in lower Manhattan that followed had minimal effect on global banking systems. Backup systems were activated at various locations around the region, many of which had been established to deal with a possible complete failure of networks in the financial district on 31 December 1999.[60]

Opposing view

Others have asserted that there were no, or very few, critical problems to begin with. They also asserted that there would have been only a few minor mistakes and that a "fix on failure" approach would have been the most efficient and cost-effective way to solve these problems as they occurred.

- Countries such as South Korea and Italy invested little to nothing in Y2K remediation,[61] yet had the same negligible Y2K problems as countries that spent enormous sums of money.[62]

- The lack of Y2K-related problems in schools, many of which undertook little or no remediation effort. By 1 September 1999, only 28% of US schools had achieved compliance for mission-critical systems, and a government report predicted that "Y2K failures could very well plague the computers used by schools to manage payrolls, student records, online curricula, and building safety systems".[63]

- The lack of Y2K-related problems in an estimated 1.5 million small businesses that undertook no remediation effort. On 3 January 2000 (the first weekday of the year), the Small Business Administration received an estimated 40 calls from businesses with computer problems, a number similar to the average. None of the problems were critical.[64]

- The absence of Y2K-related problems occurring before 1 January 2000, even though the 2000 financial year commenced in 1999 in many jurisdictions, and a wide range of forward-looking calculations involved dates in 2000 and later years. Estimates made in the lead-up to 2000 suggested that around 25% of all problems should have occurred before 2000.[65] Critics of large-scale remediation argued during 1999 that the absence of significant reported problems in non-compliant small firms was evidence that there had been, and would be, no serious problems needing to be fixed in any firm, and that the scale of the problem had therefore been severely overestimated.[66] However, this can be countered with the observation that large companies had significant problems requiring action, that Y2K programmes were fully aware of the variable timescale, and that they were working to a series of earlier target dates rather than a single fixed target of 31 December 1999.[57]

See also

- Deep Impact, a space probe that was lost when its internal clock reached exactly 2^32 tenths of a second since 2000 on 11 August 2013, 00:38:49.

- IPv4 address exhaustion, problems caused by the limited allocation size for numeric internet addresses

- ISO 8601, an international standard for representing dates and times, which mandates the use of (at least) four digits for the year

- Perpetual calendar, a calendar valid for many years, including before and after 2000

- Year 10,000 problem, about computer software that cannot accept five digit years

- YEAR2000, a configuration setting supported by some versions of DR-DOS to overcome Year 2000 BIOS bugs

- 512k day, an event in 2014 involving a software limitation in network routers
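The date given in the Deep Impact entry can be checked with a short calculation. The sketch below assumes the clock counted tenths of a second from 1 January 2000, 00:00:00 UTC and overflowed on reaching 2^32 ticks; the exact epoch and the function name `rollover_time` are illustrative assumptions, not details taken from mission documentation.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the counter started at 1 January 2000, 00:00:00 UTC.
EPOCH = datetime(2000, 1, 1, tzinfo=timezone.utc)
TICKS_PER_SECOND = 10        # one tick = one tenth of a second
OVERFLOW_TICKS = 2 ** 32     # first value that no longer fits in 32 bits


def rollover_time(epoch=EPOCH, ticks=OVERFLOW_TICKS,
                  ticks_per_second=TICKS_PER_SECOND):
    """Return the moment at which `ticks` ticks have elapsed since `epoch`."""
    # Integer milliseconds avoid floating-point rounding of the tick count.
    milliseconds = ticks * 1000 // ticks_per_second
    return epoch + timedelta(milliseconds=milliseconds)


overflow_at = rollover_time()
print(overflow_at.strftime("%d %B %Y, %H:%M:%S"))
```

Under these assumptions the result falls on 11 August 2013 at 00:38:49, which matches the date and time quoted in the entry.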

References

- ↑ BSI Standard, on year 2000.

- ↑ Wired (25 February 2000). "Leap Day Tuesday Last Y2K Worry". Retrieved 16 October 2016.

- ↑ Carrington, Damian (4 January 2000). "Was Y2K bug a boost?". BBC News. Archived from the original on 22 April 2004. Retrieved 19 September 2009.

- ↑ Spencer Bolles. "Computer bugs in the year 2000". Newsgroup: net.bugs. Usenet: [email protected].

- ↑ American RadioWorks Y2K Notebook Problems – The Surprising Legacy of Y2K. Retrieved on 22 April 2007.

- ↑ A web search on images for "computer memory ads 1975" returns advertisements showing pricing for 8K of memory at $990 and 64K of memory at $1495.

- ↑ Looking at the Y2K bug, portal on CNN.com Archived 7 February 2006 at the Wayback Machine.

- 1 2 3 Presenter: Stephen Fry (2009-10-03). "In the beginning was the nerd". Archive on 4. BBC Radio 4.

- ↑ Testimony by Alan Greenspan, ex-Chairman of the Federal Reserve before the Senate Banking Committee, 25 February 1998, ISBN 978-0-16-057997-4

- ↑ "Key computer coding creator dies". The Washington Post. 25 June 2004. Retrieved 25 September 2011.

- ↑ Braden, Robert (ed.) (October 1989). "Requirements for Internet Hosts -- Application and Support". Internet Engineering Task Force. Retrieved 16 October 2016.

- ↑ Microsoft Support (17 December 2015). "Microsoft Knowledge Base article 214326". Retrieved 16 October 2016.

- ↑ "JavaScript Reference Javascript 1.2". Sun Microsystems. Retrieved 7 June 2009.

- ↑ "JavaScript Reference Javascript 1.3". Sun. Retrieved 7 June 2009.

- ↑ TVTropes. "Millennium Bug - Television Tropes & Idioms". Retrieved 16 October 2016.

- ↑ "The Risks Digest Volume 4: Issue 45". The Risks Digest.

- ↑ Stockton, J.R., "Critical and Significant Dates" Merlyn.

- ↑ A. van Deursen, "The Leap Year Problem" The Year/2000 Journal 2(4):65–70, July/August 1998.

- ↑ CRN (4 January 2010). "Bank of Queensland hit by "Y2.01k" glitch". Retrieved 16 October 2016.

- ↑ "Windows Mobile glitch dates 2010 texts 2016". 5 January 2010.

- ↑ "Windows Mobile phones suffer Y2K+10 bug". 4 January 2010.

- ↑ "Bank of Queensland vs Y2K – an update". 4 January 2010.

- ↑ "Error: 8001050F Takes Down PlayStation Network".

- ↑ RTL (5 January 2010). "2010 Bug in Germany" (in French). Retrieved 16 October 2016.

- ↑ "The Case for Windowing: Techniques That Buy 60 Years", article by Raymond B. Howard, Year/2000 Journal, Mar/Apr 1998.

- ↑ Millennium bug hits retailers, from BBC News, 29 December 1999.

- ↑ Martin Wainwright (13 September 2001). "NHS faces huge damages bill after millennium bug error". The Guardian. UK. Retrieved 25 September 2011. "The health service is facing big compensation claims after admitting yesterday that failure to spot a millennium bug computer error led to incorrect Down's syndrome test results being sent to 154 pregnant women. ..."

- 1 2 Y2K bug fails to bite, from BBC News, 1 January 2000.

- 1 2 Computer problems hit three nuclear plants in Japan, report by Martyn Williams of CNN, 3 January 2000 Archived 7 December 2004 at the Wayback Machine.

- 1 2 3 "Minor bug problems arise". BBC News. British Broadcasting Corporation. Retrieved 4 December 2015.

- ↑ Preparation pays off; world reports only tiny Y2K glitches at the Wayback Machine (archive index), report by Marsha Walton and Miles O'Brien of CNN, 1 January 2000.

- ↑ Wired (29 February 2000). "HK Leap Year Free of Y2K Glitches". Retrieved 16 October 2016.

- ↑ Wired (1 March 2000). "Leap Day Had Its Glitches". Retrieved 16 October 2016.

- ↑ The last bite of the bug, report from BBC News, 5 January 2001.

- ↑ Iliana V. Kohler; Jordan Kaltchev; Mariana Dimova. "Integrated Information System for Demographic Statistics 'ESGRAON-TDS' in Bulgaria" (PDF). 6 Article 12. Demographic Research: 325–354.

- ↑ "Uganda National Y2k Task Force End-June 1999 Public Position Statement". 30 June 1999. Retrieved 11 January 2012.

- ↑ "Y2K Center urges more information on Y2K readiness". 3 August 1999. Retrieved 11 January 2012.

- ↑ DeBruce, Orlando; Jones, Jennifer (23 February 1999). "White House shifts Y2K focus to states". CNN. Retrieved 16 October 2016.

- 1 2 "FCC Y2K Communications Sector Report (March 1999) copy available at WUTC" (PDF). (1.66 MB)

- ↑ See President Clinton: Addressing the Y2K Problem, White House, 19 October 1998.

- ↑ "Federal Communications Commission Spearheads Oversight of the U.S. Communications Industries' Y2K Preparedness, Robert J Butler and Anne E Hoge, Wiley, Rein & Fielding September/October 1999". Opengroup. Archived from the original on 9 October 2008. Retrieved 16 October 2016.

- ↑ "Basic Internet Structures Expected to be Y2K Ready, Telecom News, NCS (1999 Issue 2)" (PDF). (799 KB)

- ↑ "Finding Aids at The University of Minnesota".

- ↑ "quetek.com". quetek.com. Retrieved 25 September 2011.

- ↑ Internet Year 2000 Campaign archived at Cybertelecom.

- ↑ Kunstler, Jim (1999). "My Y2K—A Personal Statement". Kunstler, Jim. Retrieved 12 December 2006.

- 1 2 3 "False Prophets, Real Profits - Americans United". Retrieved 9 November 2016.

- ↑ Dutton, D., 31 December 2009, "It's Always the End of the World as We Know It", The New York Times

- ↑ Coen, J., 1 March 1999, "Some Christians Fear End, It's just a day to others" Chicago Tribune

- ↑ Hart, B., 12 February 1999 Deseret News, "Christian Y2K Alarmists Irresponsible" Scripps Howard News Service

- ↑ Smith, B., 1999, I Spy with my Little Eye, MS Life Media, chapter 24 - Y2K Bug, http://www.barrysmith.org.nz/site/books/

- 1 2 "Col Stringer Ministries - Newsletter Vol.1 : No.4". Retrieved 9 November 2016.

- ↑ Rivera, J., 17 February 1999, "Apocalypse Now – Y2K spurs fears", The Baltimore Sun

- ↑ Federal Reserve Bank of Minneapolis Community Development Project. "Consumer Price Index (estimate) 1800–". Federal Reserve Bank of Minneapolis. Retrieved October 21, 2016.

- ↑ Y2K: Overhyped and oversold?, report from BBC News, 6 January 2000.

- ↑ Robert L. Mitchell (28 December 2009). "Y2K: The good, the bad and the crazy". ComputerWorld.

- 1 2 James Christie, (12 January 2015), Y2K – why I know it was a real problem, 'Claro Testing Blog' (accessed 12 January 2015)

- ↑ Y2K readiness helped New York after 9/11, article by Lois Slavin of MIT News, 20 November 2002.

- ↑ "Finance & Development, March 2002 - September 11 and the U.S. Payment System". Finance and Development - F&D.

- ↑ Y2K readiness helped NYC on 9/11, article by Rae Zimmerman of MIT News, 19 November 2002.

- ↑ Dutton, Denis (31 December 2009), "It's Always the End of the World as We Know It", The New York Times.

- ↑ Smith, R. Jeffrey (4 January 2000), "Italy Swatted the Y2K Bug", The Washington Post.

- ↑ White House: Schools lag in Y2K readiness: President's Council sounds alarm over K-12 districts' preparations so far, article by Jonathan Levine of eSchool News, 1 September 1999.

- ↑ Hoover, Kent (9 January 2000), "Most small businesses win their Y2K gamble", Puget Sound Business Journal.

- ↑ Lights out? Y2K appears safe, article by Elizabeth Weise of USA Today, 14 February 1999.

- ↑ John Quiggin, (2 September 1999), Y2K bug may never bite, 'Australian Financial Review' (from The Internet Archive accessed 29 December 2009).

External links

- The full text of How Long Until the Y2K Computer Problem? at Wikisource

- Center for Y2K and Society Records, Charles Babbage Institute, University of Minnesota. Documents activities of Center for Y2K and Society (based in Washington DC) working with non-profit institutions and foundations to respond to possible societal impacts of the Y2K computer problem: helping the poor and vulnerable as well as protecting human health and the environment. Records donated by executive director, Norman L. Dean.

- International Y2K Cooperation Center Records, 1998–2000, Charles Babbage Institute, University of Minnesota. Collection contains the materials of the International Y2K Cooperation Center. Includes country reports, news clippings, country questionnaires, country telephone directories, background materials, audio visual materials and papers of Bruce W. McConnell, director of IY2KCC.

- Preparing for an Apocalypse: Y2K, Charles Babbage Institute, University of Minnesota. A web exhibit curated by Stephanie H. Crowe

- BBC: Y2K coverage

- In The Beginning There Was The Nerd – BBC Radio documentary, using archival recordings, about the history of computers and the millennium bug 10 years on.

- The Surprising Legacy Of Y2K – Radio documentary by American Public Media, on the history and legacy of the millennium bug five years on.

- The Yawn of a New Millennium

- CBC Digital Archives – The Eve of the Millennium

- How the UK coped with the millennium bug

- Time running out for PCs at big companies, CNN