Wget

|

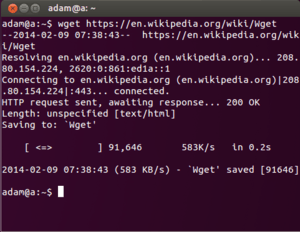

Screenshot of Wget running on Ubuntu and downloading this Wikipedia page about itself. | |

| Developer(s) | Giuseppe Scrivano, Hrvoje Nikšić |

|---|---|

| Initial release | January 1996 |

| Stable release |

1.18

/ 9 June 2016[1] |

| Repository |

git |

| Written in | C |

| Operating system | Cross-platform |

| Type | FTP client / HTTP client |

| License | GNU General Public License version 3 and later[2] |

| Website |

www |

GNU Wget (or just Wget, formerly Geturl, also written as its package name, wget) is a computer program that retrieves content from web servers. It is part of the GNU Project.

Its name derives from World Wide Web and get. It supports downloading via the HTTP, HTTPS, and FTP protocols.

Its features include recursive download, conversion of links for offline viewing of local HTML, and support for proxies. It appeared in 1996, coinciding with the boom in popularity of the Web, which led to its wide use among Unix users and its inclusion in most major Linux distributions. Written in portable C, Wget can be easily installed on any Unix-like system. Programmers have ported Wget to many environments, including Microsoft Windows, Mac OS X, OpenVMS, HP-UX, MorphOS and AmigaOS. Since version 1.14, Wget has been able to save its output in WARC, the standard web archiving format.[3]

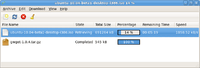

It has been used as the basis for graphical programs such as GWget for the GNOME Desktop.

History

Wget descends from an earlier program named Geturl by the same author,[4] the development of which commenced in late 1995. The name changed to Wget after the author became aware of an earlier Amiga program named GetURL, written by James Burton in AREXX.

Wget filled a gap in the web-downloading software available in the mid-1990s. No single program could reliably use both HTTP and FTP to download files. Existing programs either supported FTP (such as NcFTP and dl) or were written in Perl, which was not yet ubiquitous. While Wget was inspired by features of some of the existing programs, it supported both HTTP and FTP and could be built using only the standard development tools found on every Unix system.

At that time many Unix users struggled behind extremely slow university and dial-up Internet connections, leading to a growing need for a downloading agent that could deal with transient network failures without assistance from the human operator.

In 2010, US Army intelligence analyst PFC Chelsea Manning used Wget to download the 250,000 U.S. diplomatic cables and the 500,000 Army reports that came to be known as the Iraq War logs and Afghan War logs, all of which were sent to WikiLeaks.[5]

Features

Robustness

Wget has been designed for robustness over slow or unstable network connections. If a download does not complete due to a network problem, Wget will automatically try to continue the download from where it left off, and repeat this until the whole file has been retrieved. It was one of the first clients to make use of the then-new Range HTTP header to support this feature.
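As a minimal sketch of how this resumption is typically requested, the wrapper below combines the standard options -c (--continue), -t (--tries) and --waitretry. The URL is a placeholder and the function name resume_download is hypothetical, chosen only for illustration.

```shell
# Placeholder URL used only for illustration.
url="http://www.example.com/large-file.iso"

# Hypothetical helper: resume a partial download and keep retrying.
#   -c              continue from the existing partial file, if any
#   -t 0            retry without limit (0 means "infinite")
#   --waitretry=10  back off between retries, waiting at most 10 seconds
resume_download() {
    wget -c -t 0 --waitretry=10 "$1"
}
```

Calling resume_download "$url" after an interrupted transfer should pick up from the end of the partial file, provided the server supports ranged requests.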

Recursive download

Wget can optionally work like a web crawler by extracting resources linked from HTML pages and downloading them in sequence, repeating the process recursively until all the pages have been downloaded or a maximum recursion depth specified by the user has been reached. The downloaded pages are saved in a directory structure resembling that on the remote server. This "recursive download" enables partial or complete mirroring of web sites via HTTP. Links in downloaded HTML pages can be adjusted to point to locally downloaded material for offline viewing. When performing this kind of automatic mirroring of web sites, Wget supports the Robots Exclusion Standard (unless the option -e robots=off is used).

Recursive download works with FTP as well, where Wget issues the LIST command to find which additional files to download, repeating this process for directories and files under the one specified in the top URL. Shell-like wildcards are supported when the download of FTP URLs is requested.

When downloading recursively over either HTTP or FTP, Wget can be instructed to inspect the timestamps of local and remote files, and download only the remote files newer than the corresponding local ones. This allows easy mirroring of HTTP and FTP sites, but is considered inefficient and more error-prone when compared to programs designed for mirroring from the ground up, such as rsync. On the other hand, Wget doesn't require special server-side software for this task.
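The timestamp comparison described above can be sketched with the standard -N (--timestamping) option. The function name refresh_mirror is hypothetical, and the example URL in the note below is a placeholder.

```shell
# Hypothetical helper: refresh a local copy, fetching only files that are
# newer on the server than on disk.
#   -r           recurse into linked pages or FTP directories
#   -N           timestamping: skip remote files that are not newer
#   --no-parent  do not ascend above the starting directory
refresh_mirror() {
    wget -r -N --no-parent "$1"
}
```

Running refresh_mirror http://www.example.com/docs/ a second time should transfer only the files that changed on the server. The -m (--mirror) option used in later examples turns on recursion and timestamping together.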

Non-interactiveness

Wget is non-interactive in the sense that, once started, it does not require user interaction and does not need to control a TTY, being able to log its progress to a separate file for later inspection. Users can start Wget and log off, leaving the program unattended. By contrast, most graphical or text user interface web browsers require the user to remain logged in and to manually restart failed downloads, which can be a great hindrance when transferring a lot of data.
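A small sketch of an unattended download, assuming the standard -b (--background) and -o (--output-file) options. The function name background_download and the file names in the note below are hypothetical.

```shell
# Hypothetical helper: start an unattended download.
#   -b       go to the background immediately after startup
#   -o FILE  write progress messages to FILE instead of the terminal
background_download() {
    wget -b -o "$2" "$1"
}
```

After background_download http://www.example.com/large-file.iso download.log, the user can log off and later inspect download.log (for example with tail -f) to check progress.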

Portability

Written in a highly portable style of C with minimal dependencies on third-party libraries, Wget requires little more than a C compiler and a BSD-like interface to TCP/IP networking. Designed as a Unix program invoked from the Unix shell, the program has been ported to numerous Unix-like environments and systems, including Microsoft Windows via Cygwin, and Mac OS X. It is also available as a native Microsoft Windows program as one of the GnuWin packages.

Other features

- Wget supports download through proxies, which are widely deployed to provide web access inside company firewalls and to cache and quickly deliver frequently accessed content.

- It makes use of persistent HTTP connections where available.

- IPv6 is supported on systems that include the appropriate interfaces.

- SSL/TLS is supported for encrypted downloads using the OpenSSL or GnuTLS library.

- Files larger than 2 GiB are supported on 32-bit systems that include the appropriate interfaces.

- Download speed may be throttled to avoid using up all of the available bandwidth.

- Can save its output in the web archiving standard WARC format, deduplicating from an associated CDX file as required.[3]
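Two of the features listed above, bandwidth throttling and WARC output, can be combined as in the sketch below. The options --limit-rate, --warc-file and --warc-cdx are standard in Wget 1.14 and later; the function name archive_page, the 200k limit and the archive name are assumptions made for illustration.

```shell
# Hypothetical helper: archive a page to WARC while capping bandwidth.
#   --limit-rate=200k  cap the download speed at about 200 KB/s
#   --warc-file=NAME   write a WARC archive whose file name starts with NAME
#   --warc-cdx         also write a CDX index of the archived records
archive_page() {
    wget --limit-rate=200k --warc-file="$2" --warc-cdx "$1"
}
```

For example, archive_page http://www.example.com/ example would store the responses in a WARC file named after "example" alongside the normally downloaded page.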

Using Wget

Basic usage

Typical usage of GNU Wget consists of invoking it from the command line, providing one or more URLs as arguments.

# Download the title page of example.com to a file

# named "index.html".

wget http://www.example.com/

# Download Wget's source code from the GNU ftp site.

wget ftp://ftp.gnu.org/pub/gnu/wget/wget-latest.tar.gz

More complex usage includes automatic download of multiple URLs into a directory hierarchy.

# Download *.gif from a website

# (globbing, like "wget http://www.server.com/dir/*.gif", only works with ftp)

wget -e robots=off -r -l 1 --no-parent -A .gif ftp://www.example.com/dir/

# Download the title page of example.com, along with

# the images and style sheets needed to display the page, and convert the

# URLs inside it to refer to locally available content.

wget -p -k http://www.example.com/

# Download the entire contents of example.com

wget -r -l 0 http://www.example.com/

Advanced examples

Download a mirror of the errata for a book you just purchased, follow all local links recursively and make the files suitable for off-line viewing. Wait a randomized interval of roughly 5 seconds (0.5 to 1.5 times the base wait) between file downloads and log the access results to "myLog.log". When there is a failure, try up to 7 times in total, waiting up to 14 seconds between attempts. (The command must be on one line.)

wget -t 7 -w 5 --waitretry=14 --random-wait -m -k -K -e robots=off http://www.oreilly.com/catalog/upt3/errata/ -o ./myLog.log

Collect only specific links listed line by line in the local file "my_movies.txt". Wait a randomized interval of roughly 33 seconds (0.5 to 1.5 times the base wait) between files, and throttle bandwidth to 512 kilobytes per second. When there is a failure, try up to 22 times in total, waiting up to 48 seconds between attempts. Send an empty user agent and HTTP referer to a restrictive site and ignore robot exclusions. Place all the captured files in the local "movies" directory and write the access results to the local file "my_movies.log". This is good for downloading specific sets of files without hogging the network:

wget -t 22 --waitretry=48 --wait=33 --random-wait --referer="" --user-agent="" --limit-rate=512k -e robots=off -o ./my_movies.log -P ./movies -i ./my_movies.txt

Instead of an empty referer and user-agent, use real values that do not cause an “ERROR: 403 Forbidden” message from a restrictive site. It is also possible to create a .wgetrc file that holds some default values.[6] To get around cookie-tracked sessions:

# Using Wget to download content protected by referer and cookies.

# 1. Get a base URL and save its cookies in a file.

# 2. Get protected content using stored cookies.

wget --cookies=on --keep-session-cookies --save-cookies=cookie.txt http://first_page

wget --referer=http://first_page --cookies=on --load-cookies=cookie.txt --keep-session-cookies --save-cookies=cookie.txt http://second_page
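The .wgetrc file mentioned earlier can hold the same settings as the command-line options. The sketch below writes a sample startup file to a scratch directory and points Wget at it through the WGETRC environment variable; the values are taken from the "my_movies" example purely for illustration, and the scratch location is an assumption (a per-user file normally lives at ~/.wgetrc).

```shell
# Write a sample startup file to a scratch directory (illustrative values).
rcdir=$(mktemp -d)
rcfile="$rcdir/wgetrc"
cat > "$rcfile" <<'EOF'
# Defaults matching the options of the my_movies example.
tries = 22
waitretry = 48
wait = 33
random_wait = on
limit_rate = 512k
EOF

# Point Wget at this file instead of ~/.wgetrc for subsequent invocations.
export WGETRC="$rcfile"
```

With these defaults in place, the corresponding command-line options can be omitted; any option given on the command line still overrides the file.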

Mirror and convert CGI, ASP or PHP and others to HTML for offline browsing:

# Mirror website to a static copy for local browsing.

# This means all links will be changed to point to the local files.

# Note: --html-extension appends ".html" to the names of HTML pages generated by CGI, ASP, PHP or similar whose names do not already end in .html.

wget --mirror -w 2 -p --html-extension --convert-links -P ${dir_prefix} http://www.yourdomain.com

Authors and copyright

GNU Wget was written by Hrvoje Nikšić with contributions by many other people, including Dan Harkless, Ian Abbott, and Mauro Tortonesi. Significant contributions are credited in the AUTHORS file included in the distribution, and all remaining ones are documented in the changelogs, also included with the program. Wget is currently maintained by Giuseppe Scrivano.[7]

The copyright to Wget belongs to the Free Software Foundation, whose policy is to require copyright assignments for all non-trivial contributions to GNU software.[8]

License

GNU Wget is distributed under the terms of the GNU General Public License, version 3 or later, with a special exception that allows distribution of binaries linked against the OpenSSL library. The text of the exception follows:[2]

Additional permission under GNU GPL version 3 section 7

If you modify this program, or any covered work, by linking or combining it with the OpenSSL project's OpenSSL library (or a modified version of that library), containing parts covered by the terms of the OpenSSL or SSLeay licenses, the Free Software Foundation grants you additional permission to convey the resulting work. Corresponding Source for a non-source form of such a combination shall include the source code for the parts of OpenSSL used as well as that of the covered work.

It is expected that the exception clause will be removed once Wget is modified to also link with the GnuTLS library.

Wget's documentation, in the form of a Texinfo reference manual, is distributed under the terms of the GNU Free Documentation License, version 1.2 or later. The man page usually distributed on Unix-like systems is automatically generated from a subset of the Texinfo manual and falls under the terms of the same license.

Development

Wget is developed in an open fashion, most of the design decisions typically being discussed on the public mailing list[9] followed by users and developers. Bug reports and patches are relayed to the same list.

Source contribution

The preferred method of contributing to Wget's code and documentation is through source updates in the form of textual patches generated by the diff utility. Patches intended for inclusion in Wget are submitted to the mailing list[9] where they are reviewed by the maintainers. Patches that pass the maintainers' scrutiny are installed in the sources. Instructions on patch creation as well as style guidelines are outlined on the project's wiki.[10]
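As a rough illustration of a textual patch, the sketch below produces one with the diff utility in unified format (-u). The file names and contents are hypothetical stand-ins, not files from the Wget source tree, and the choice of unified format is an assumption; the project's patch guidelines are the authority on the expected format.

```shell
# Hypothetical file names and contents used only for illustration.
workdir=$(mktemp -d)
printf 'retries = 3\n' > "$workdir/example.c.orig"
printf 'retries = 5\n' > "$workdir/example.c"

# diff exits with status 1 when the files differ, so that status is not
# treated as an error here.
(cd "$workdir" && diff -u example.c.orig example.c > example.patch) || true
```

The resulting example.patch lists removed lines prefixed with "-" and added lines prefixed with "+", which is the kind of text a reviewer reads on the mailing list.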

The source code can also be tracked via a remote version control repository that hosts revision history beginning with the 1.5.3 release. The repository is currently running Git.[11] Prior to that, the source code had been hosted on (in reverse chronological order) Bazaar,[12] Mercurial, Subversion, and CVS.

Release

When a sufficient number of features or bug fixes accumulate during development, Wget is released to the general public via the GNU FTP site and its mirrors. Because the project is run entirely by volunteers, there is no external pressure to issue a release, nor are there enforceable release deadlines.

Releases are numbered as versions of the form major.minor[.revision], such as Wget 1.11 or Wget 1.8.2. An increase of the major version number represents large and possibly incompatible changes in Wget's behavior or a radical redesign of the code base. An increase of the minor version number designates addition of new features and bug fixes. A new revision indicates a release that, compared to the previous revision, only contains bug fixes. Revision zero is omitted, meaning that for example Wget 1.11 is the same as 1.11.0. Wget does not use the odd-even release number convention popularized by Linux.
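The rule that revision zero is omitted can be made concrete with a small helper that expands a version string to its full three-part form. This is only an illustrative sketch; the function name normalize_version is hypothetical and is not part of Wget.

```shell
# Hypothetical helper: expand major.minor to major.minor.0.
normalize_version() {
    case "$1" in
        *.*.*) printf '%s\n' "$1" ;;    # already major.minor.revision
        *.*)   printf '%s.0\n' "$1" ;;  # major.minor: revision zero was omitted
        *)     printf '%s\n' "$1" ;;    # not in the documented form; pass through
    esac
}
```

Here normalize_version 1.11 prints 1.11.0, while normalize_version 1.8.2 prints 1.8.2 unchanged.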

Popular references

Wget makes an appearance in the 2010 Columbia Pictures motion picture release, The Social Network. The lead character, loosely based on Facebook co-founder Mark Zuckerberg, uses Wget to aggregate student photos from various Harvard University housing-facility directories.

Notable releases

The following releases represent notable milestones in Wget's development. Features listed next to each release are edited for brevity and do not constitute comprehensive information about the release, which is available in the NEWS file distributed with Wget.[4]

- Geturl 1.0, released January 1996, was the first publicly available release. The first English-language announcement can be traced to a Usenet news posting, which probably refers to Geturl 1.3.4 released in June.[13]

- Wget 1.4.0, released November 1996, was the first version to use the name Wget. It was also the first release distributed under the terms of the GNU GPL, Geturl having been distributed under an ad-hoc no-warranty license.

- Wget 1.4.3, released February 1997, was the first version released as part of the GNU project with the copyright assigned to the FSF.

- Wget 1.5.3, released September 1998, was a milestone in the program's popularity. This version was bundled with many Linux-based distributions, which exposed the program to a much wider audience.

- Wget 1.6, released December 1999, incorporated many bug fixes for the (by then stale) 1.5.3 release, largely thanks to the effort of Dan Harkless.

- Wget 1.7, released June 2001, introduced SSL support, cookies, and persistent connections.

- Wget 1.8, released December 2001, added bandwidth throttling, new progress indicators, and the breadth-first traversal of the hyperlink graph.

- Wget 1.9, released October 2003, included experimental IPv6 support and the ability to POST data to HTTP servers.

- Wget 1.10, released June 2005, introduced large file support, IPv6 support on dual-family systems, NTLM authorization, and SSL improvements. The maintainership was picked up by Mauro Tortonesi.

- Wget 1.11, released January 2008, moved to version 3 of the GNU General Public License, and added preliminary support for the Content-Disposition header, which is often used by CGI scripts to indicate the name of a file for downloading. Security-related improvements were also made to the HTTP authentication code. Micah Cowan took over maintainership of the project.

- Wget 1.12, released September 2009, added support for parsing URLs from CSS content on the web, and for handling Internationalized Resource Identifiers.

- Wget 1.13, released August 2011, added support for HTTP/1.1, fixed some portability issues, and used the GnuTLS library by default for secure connections.[14]

- Wget 1.14, released August 2012, improved support for TLS and added support for RFC 2617 Digest Access Authentication.

- Wget 1.15, released January 2014, added --https-only and support for Perfect Forward Secrecy.

- Wget 1.16, released October 2014, changed the default progress bar output, closed CVE-2014-4877, added support for libpsl to verify cookie domains, and introduced --start-pos to allow starting downloads from a specified position.

Related works

GWget

GWget is a free software graphical user interface for Wget. It is developed by David Sedeño Fernández and is part of the GNOME project. GWget supports all of the main features that Wget does, as well as parallel downloads.[15]

Cliget

Cliget is an open source Firefox add-on downloader that uses Curl, Wget and Aria2. It is developed by Zaid Abdulla.[16][17][18]

References

- [1] Scrivano, Giuseppe (2016-06-09). "GNU wget 1,17.1 Released". Free Software Foundation, Inc. Retrieved 2016-06-11.

- [2] "README file". Retrieved 2014-12-01.

- [3] Scrivano, Giuseppe (August 6, 2012). "GNU wget 1.14 released". Free Software Foundation, Inc. Retrieved February 25, 2016.

- [4] "GNU Wget NEWS – history of user-visible changes". Svn.dotsrc.org. 2005-03-20. Archived from the original on March 13, 2007. Retrieved 2012-12-08. "Wget 1.4.0 [formerly known as Geturl] is an extensive rewrite of Geturl."

- [5] Sanger, David and Eric Schmitt (8 February 2014). "Snowden Used Low-Cost Tool to Best N.S.A.". NY Times. Retrieved 10 February 2014.

- [6] Wget Trick to Download from Restrictive Sites

- [7] "WgetMaintainer". 23 April 2010. Retrieved 20 June 2010.

- [8] "Why the FSF gets copyright assignments from contributors - GNU Project - Free Software Foundation (FSF)". Gnu.org. Retrieved 2012-12-08.

- [9] "Gmane Loom". News.gmane.org. Retrieved 2012-12-08.

- [10] "PatchGuidelines - The Wget Wgiki". Wget.addictivecode.org. 2009-09-22. Retrieved 2012-12-08.

- [11] "RepositoryAccess". 31 July 2012. Retrieved 7 June 2013.

- [12] "RepositoryAccess". 22 May 2010. Retrieved 20 June 2010.

- [13] Niksic, Hrvoje (June 24, 1996). "Geturl: Software for non-interactive downloading". comp.infosystems.www.announce. Retrieved November 17, 2016.

- [14] Wget NEWS file

- [15] GWget Home Page

- [16] "zaidka/cliget". GitHub. Retrieved 2016-08-25.

- [17] "Meet the cliget Developer :: Add-ons for Firefox". addons.mozilla.org. Retrieved 2016-08-25.

- [18] "cliget". addons.mozilla.org. Retrieved 2016-08-25.
