Harmony search

In operations research, harmony search is a phenomenon-mimicking metaheuristic introduced in 2001 by Zong Woo Geem, Joong Hoon Kim, and G. V. Loganathan.[1] Harmony search is inspired by the improvisation process of jazz musicians.

Metaphor

In the HS algorithm, each musician (= decision variable) plays (= generates) a note (= a value) for finding a best harmony (= global optimum) all together.

Algorithm

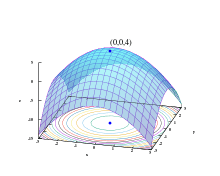

Harmony search tries to find a vector which optimizes (minimizes or maximizes) a certain objective function.

The algorithm has the following steps:

Step 1: Generate random vectors () as many as (harmony memory size), then store them in harmony memory (HM).

Step 2: Generate a new vector . For each component ,

- with probability (harmony memory considering rate; 0 ≤ ≤ 1), pick the stored value from HM:

- with probability , pick a random value within the allowed range.

Step 3: Perform additional work if the value in Step 2 came from HM.

- with probability (pitch adjusting rate; 0 ≤ ≤ 1), change by a small amount: or for discrete variable; or for continuous variable.

- with probability , do nothing.

Step 4: If is better than the worst vector in HM, replace with .

Step 5: Repeat from Step 2 to Step 4 until termination criterion (e.g. maximum iterations) is satisfied.

The parameters of the algorithm are

- = the size of the harmony memory. It generally varies from 1 to 100. (typical value = 30)

- = the rate of choosing a value from the harmony memory. It generally varies from 0.7 to 0.99. (typical value = 0.9)

- = the rate of choosing a neighboring value. It generally varies from 0.1 to 0.5. (typical value = 0.3)

- = the amount between two neighboring values in discrete candidate set.

- (fret width, formerly bandwidth) = the amount of maximum change in pitch adjustment. This can be (0.01 × allowed range) to (0.001 × allowed range).

It is possible to vary the parameter values as the search progresses, which gives an effect similar to simulated annealing.

Parameter-setting-free researches have been also performed. In the researches, algorithm users do not need tedious parameter setting process.

Criticism

Miriam Padberg has shown in 2011 that for binary optimization problems the Harmony Search algorithm is equivalent to a certain evolutionary algorithm.[2] In fact, the reasoning is similar to that used in the work of Weyland, but this time explicitly stated in a rigorous mathematical way.

Saka, Hasançebi and Geem have provided ample evidence to show Harmony Search is not a special case of (μ+1)-Differential Evolution.[3] The rebuttal in [3] starts by quoting the following paragraph from.[4]

"The HS algorithm uses the so called memory consideration with a probability of HMCR .In this case it sets the value of the decision variable to the value of the corresponding decision variable of uniformly selected solution in the HM, and performs a small modification of that value afterwards with a probability of PAR. Otherwise (with a probability of 1-HMCR), the value of the decision variable is set to a completely random value. The ES discussed here performs a population wide uniform crossover and therefore sets the value of each decision variable to the value of the corresponding decision variable of a uniformly selected solution in the current population. Then with probability p1 first mutation operator modifies the value of the decision variable slightly. After that a second mutation operator sets the value of the decision variable to a completely random value with probability p2. It is easy to see that the methods used to create the new solutions in both algorithms are equivalent. Given parameters HMCR and PAR for the HS algorithm ,we can set p1=PAR and p2= HMCR to obtain the same method of creating solutions for the ES. On the other hand, given parameters p1 and p2 for the ES,we can set HMCR=1-p2 and PAR=p1 and in this case we obtain the same method of creating solutions for the HS algorithm. This means that Harmony Search is a special case of the (μ+1) Evolution Strategy."

The rebuttal paragraph to the above claim is directly quoted from [3]

"After reading the above passage some questions arise. The first one is about existence of two parameters p1 and p2 in evolution strategies , as no mention is made about these parameters in the detailed algorithm presented above for (μ+1) -ES, which is quoted from very reliable sources ,i.e, Bäck and Schwefel,[5] Bäck.[6] On the other side, six publications are cited by Weyland [4] as a reference for realization of the mutation operator in (μ+1)-ES in conjunction with p1 and p2 parameters. The two of these publications, which are also repeated in this article from Refs. Schwefel,[7][8] are the published books on evolution strategies, whereas the other four; namely by Fogel and Atmar,[9] Michalewicz etal.,[10] BäckandSchwefel [11] Michalewicz [12] refer to some selected articles on evolutionary algorithms. Excluding Schwefel,[7] which is in German language, a detailed inspection concerning the other five publications turns up no evidence of the use of p1 and p2 parameters in any evolution strategy method. The inspection further reveals that none of these publications indeed addresses algorithmic formulation and details of (μ+1)-ES except by Rechenberg.[13] What is more, a literature survey on the use of parameters p1 and p2 in evolution strategies does not yield any information which verifies the use of these parameters. In this case one wonders how Weyland[4] has reached to the conclusion that evolution strategies inherently use these two parameters. The presence of any evolutionary computation method which uses p1 and p2 parameters in its search algorithm is out of the knowledge of the authors, but the fact remains that evolution strategies do not incorporate these two parameters in their search methodologies. It seems that these two parameters are misleadingly introduced as the standard components of (μ+1)-ES by Weyland [4] because presumably a stronger proof is needed to reinforce the assertion that harmony search algorithm is a special case of evolution strategies."

Despite the similarity between HS and (μ+1)-ES on some levels, there are a sufficient number of strong evidences to substantiate the fact that the two algorithms are completely different methods both conceptually and operationally. Firstly HS used Pitch adjustment probabilistic ally second, the local search strategies implemented by these techniques are completely dissimilar. In the harmony search method, if PAR test gives the answer of “yes,” the new value of a design variable is taken as the value available in the harmony matrix either one row up or down, or few rows up or down. On the other hand in evolution strategies the new value of a design variable is obtained by applying a normally distributed based mutation to the design variable. This mutation will not generate a value which may be in the one or more rows up or down. Naturally, the new value of a particular design variable will be different in this operation. Even if no mutation is applied to the design variable and one of the parent vectors is selected for the new design variable, this vector will be different from the one selected in the harmony search algorithm. This means harmony search method differs from the evolution strategies in its local search strategy.

References

- ↑ Geem, Zong Woo, Joong Hoon Kim, and G. V. Loganathan (2001). "A new heuristic optimization algorithm: harmony search." Simulation 76.2: pp. 60-68.

- ↑ Padberg, Miriam (2012). "Harmony Search Algorithms for binary optimization problems". Operations Research Proceedings. Operations Research Proceedings. 2011: 343–348. doi:10.1007/978-3-642-29210-1_55. ISBN 978-3-642-29209-5.

- 1 2 3 Saka, M. P.; Hasançebi, O.; Geem, Z. W. (2016-06-01). "Metaheuristics in structural optimization and discussions on harmony search algorithm". Swarm and Evolutionary Computation. 28: 88–97. doi:10.1016/j.swevo.2016.01.005.

- 1 2 3 4

- ↑ "Evolution strategies I: variants and their computational implementation". Proceedings of Genetic Algorithms in Engineering and Computer Science John Wiley & Sons,1995,pp.111–126.

- ↑ "Evolutionary Algorithms in Theory and Practice". Oxford University Press, New York, USA, 1996.

- 1 2 "Evolution als Strategie der experimentellen Forschung in der Strömungstechnik". Diplomarbeit, Technische Universität, Berlin, 1965.

- ↑ "H.P. Schwefel, Numerical Optimization of Computer Models,". John Wiley & Sons, USA, 1981.

- ↑ "Comparing genetic operators with Gaussian mutations in simulated evolutionary processes using linear systems". Biol.Cybern.63(2) (1990)111–114,Biol.Cybern.63(2) (1990)111–114.

- ↑ "Z. Michalewicz, C.Z.Janikow, J.B.Krawczyk, A modified genetic algorithm for optimal control problems". Comput.Math.Appl.23(12)(1992)83–94 D.

- ↑ "T.Bäck,H.Schwefel, An over view of evolutionary algorithms for parameter optimization". Evol.Comput.1(1)(1993)1–23.

- ↑ Z. Michalewicz, Genetic Algorithms + Data Structures. Springer,Berlin,1996.

- ↑ "Rechenberg, Evolutionsstrategie: Optimierung Technischer Systeme nach Prinzipien der Biologischen Evolution". Frommann-Holzboog, Stuttgart, 1973.

External links

- Harmony Search Algorithm by Zong Woo Geem